DALL-E is already proving to be a stepping stone in AI research. Its novelty lies in the way it was trained – with both text and vision stimuli – unlocking promising new directions of research in a re-emerging field called multimodal AI.

Last January, OpenAI published its latest model DALL-E, a 12-billion parameter version of the natural language processing model GPT-3, generating images rather than text.

Recently DALL-E’s creators have been signaling they will soon open access to DALL-E’s API. Undeniably, this API release will produce various innovative applications. But even before that, DALL-E is already proving to be a stepping stone in AI research. Its novelty lies in the way it was trained – with both text and vision stimuli, unlocking promising new directions of research in a re-emerging field called multimodal AI.

Advanced neural network architecture

DALL-E is built based on the transformers architecture, an easy-to-parallelize type of neural network that can scale up and be trained on enormous datasets. The model receives both the text and the image as a single stream of data and is trained using maximum likelihood to generate all of the subsequent tokens (i.e., pixels), one after another. To train it, OpenAI created a dataset with 400 million image-text pairs of unlabeled data collected from the internet.

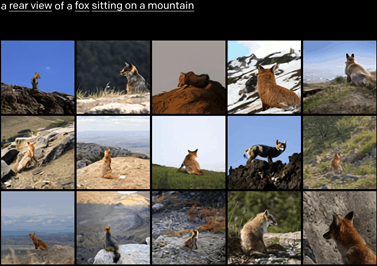

The model demonstrated impressive capabilities. For example, based on the following textual prompt, it was able to control the viewpoint of a scene and the 3D style in which it was rendered:

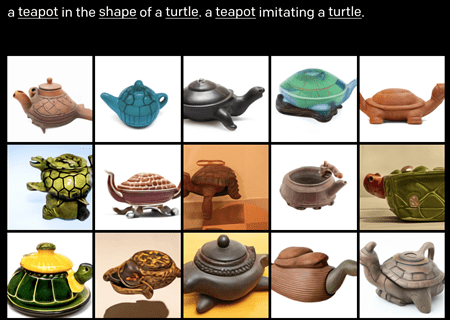

In another example, it has generated objects synthesized from a variety of unrelated ideas while respecting the shape and form of the object being designed:

These images are 100% synthetical. Unfortunately, with the lack of a standardized benchmark to measure its performance, it’s hard to quantify how successful this model is in comparison to previous GANs and future image generation models.

Not there, yet

The way DALL-E works is that it generates 512 images for each textual prompt. Then, these results are being ranked by another model from OpenAI called CLIP. In short, CLIP can automatically describe images based on their content. It also takes into account textual features, similar to how we humans do. Hence, if it sees an image of an apple with the written word ‘Orange,’ it might label it as an orange even though it’s an apple.

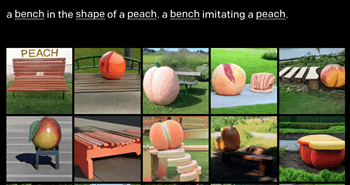

For the following prompt of a “bench in the shape of a peach,” DALL-E generated a picture of a bench with the word peach (top-left corner):

This shows multimodal models such as DALL-E are still prone to bias and typographic attacks. Both topics were explored in OpenAI’s follow-up blog post, where they described the promises and shortcomings of this technology.

Human-like intelligence

Today there is a shared understanding in the AI community that using narrow AI will not get us to human-like performance across different domains. For example, even the state-of-the-art deep learning model for early-stage cancer detection (vision) is limited in its performance when it is missing a patient’s charts (text) from her electronic health records.

This perception is becoming increasingly popular, with Oreilly’s recent Radar Trends report marking multimodality as the next step in AI and other domain experts such as Jeff Dean (Google AI SVP) sharing a similar view.

On the promise of combining language and vision, OpenAI’s Chief Scientist Ilya Sutskever stated that in 2021 OpenAI would strive to build and expose models to new stimuli: “Text alone can express a great deal of information about the world, but it’s incomplete because we live in a visual world as well.” He then adds, “this ability to process text and images together should make models smarter. Humans are exposed to not only what they read but also what they see and hear. If you can expose models to data similar to those absorbed by humans, they should learn concepts in a way that’s more similar to humans”.

Multimodality has been explored in the past and has been picking up interest once again in the last several years, with promising results such as Facebook’s AI Research lab recent paper in the field of automatic speech recognition (ASR), showcasing major progress by combining audio and text.

Final thoughts

In just less than a year since GPT-3’s release, OpenAI is about to release DALL-E as its next state-of-the-art API. This, along with the GPT and CLIP models, gets OpenAI one step closer to its promise of building sustainable and safe artificial general intelligence (AGI). It also creates endless new streams of research surpassing the previous levels of AI performance.

One thing is certain–once released, we can all expect our Twitter feed to be filled with mind-blowing applications, just this time with artificially generated images rather than artificially generated text.