The Pitfalls of Siloed AI Implementations

A common mistake industrial organizations make is deploying AI solutions in a one-off fashion, addressing isolated problems without considering broader scalability. For example, a manufacturing plant might implement an AI-based anomaly detection system for a single production line but fail to integrate similar capabilities across other facilities. Likewise, an oil and gas company may deploy AI for equipment monitoring at a single refinery but not across the entire supply chain.

This fragmented approach results in a patchwork of AI solutions that do not communicate or share insights across the organization. In addition to duplicating effort and costs, it creates data silos that limit the effectiveness of AI-driven decision-making. Furthermore, a lack of standardization in AI applications makes it difficult to scale innovations, forcing organizations to reinvent solutions for different use cases repeatedly. To maximize AI’s impact, companies must move beyond these one-off implementations and adopt a cohesive, enterprise-wide AI strategy.

The Need for Uniform Data Analytics and AI Practices

Scaling AI successfully starts with a unified approach to data management and analytics. Industrial organizations must standardize data collection, storage, and processing across all departments and facilities. Without consistent data practices, AI models struggle to analyze information effectively, leading to inaccurate or misleading insights.

A uniform approach ensures that all AI models operate on high-quality, structured data, leading to more reliable predictions and recommendations. Standardization also improves collaboration between teams, allowing them to build on shared AI insights rather than working in isolated pockets of innovation. Likewise, standardized data practices simplify compliance with regulatory requirements, because data security and governance can be maintained consistently across the organization.
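As a rough illustration of what standardized data collection can look like in practice, the sketch below maps exports from two facilities onto one shared record type. Everything in it is an assumption made for the example: the `Reading` schema, the two plant export formats, and the field names are hypothetical and do not come from any particular platform.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Reading:
    """Hypothetical common schema shared by every facility."""
    site: str
    tag: str
    timestamp: datetime  # always stored in UTC
    value_c: float       # temperature in degrees Celsius


def from_plant_a(raw: dict) -> Reading:
    # Assumed Plant A export: Fahrenheit values, epoch-second timestamps.
    return Reading(
        site="plant_a",
        tag=raw["sensor"],
        timestamp=datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
        value_c=round((raw["temp_f"] - 32) * 5 / 9, 2),
    )


def from_plant_b(raw: dict) -> Reading:
    # Assumed Plant B export: Celsius values, ISO 8601 timestamps with a UTC offset.
    return Reading(
        site="plant_b",
        tag=raw["tag_name"],
        timestamp=datetime.fromisoformat(raw["time"]).astimezone(timezone.utc),
        value_c=float(raw["celsius"]),
    )
```

Once every source is converted at the edge like this, downstream models and dashboards only ever see one schema, one unit, and one time zone.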

Obstacles to Overcome: Accessing and Understanding Siloed Data

As organizations seek to scale AI across their operations, access to high-quality, contextualized industrial data becomes a critical success factor. Without a trustworthy data foundation, AI models risk being built on fragmented or outdated information, leading to unreliable insights and poor decision-making. A unified data foundation, where there is one source of truth, ensures that all AI applications and analytics operate on consistent, accurate data. This foundation eliminates inconsistencies that can arise from disparate data sources, allowing organizations to leverage AI with confidence and drive operational efficiencies at scale.

As noted, a major challenge in scaling AI is overcoming the limitations of closed, siloed data platforms. Many organizations still rely on proprietary data systems that restrict interoperability, making it difficult to integrate information across departments and functions. Open data platforms, on the other hand, provide the flexibility needed to aggregate and analyze data from multiple sources. By eliminating these silos, organizations enable AI models to draw from a comprehensive dataset, improving their accuracy and effectiveness. Open platforms also support collaboration across teams, allowing AI-driven insights to be shared and acted upon across the enterprise.

Beyond data availability, contextualization is essential for AI to generate meaningful insights. Raw industrial data often lacks the necessary metadata, relationships, and structure needed for AI models to interpret it correctly. Contextualization, which is the linking of data to specific assets, processes, and operational conditions, helps AI understand not just what is happening but why. This is particularly important in industrial settings, where sensor readings, maintenance logs, and process parameters must be analyzed in context to generate actionable recommendations. Without this level of detail, AI models may struggle to provide reliable predictions or automation.
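To make the idea of contextualization more concrete, here is a minimal sketch that attaches asset and process metadata to a raw sensor value. The registry contents, tag names, and normal ranges are invented for illustration; a real deployment would draw them from an asset hierarchy or knowledge graph rather than a hard-coded dictionary.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class AssetContext:
    asset: str
    process_unit: str
    unit_of_measure: str
    normal_range: Tuple[float, float]


# Hypothetical registry linking sensor tags to the assets they measure.
ASSET_REGISTRY: Dict[str, AssetContext] = {
    "PT-4401": AssetContext("Pump P-101", "Crude distillation", "bar", (2.0, 6.0)),
}


def contextualize(tag: str, value: float) -> dict:
    """Return the raw reading enriched with asset and process context."""
    ctx = ASSET_REGISTRY.get(tag)
    if ctx is None:
        # Without context, the value is just a number attached to a tag name.
        return {"tag": tag, "value": value, "contextualized": False}
    low, high = ctx.normal_range
    return {
        "tag": tag,
        "value": value,
        "contextualized": True,
        "asset": ctx.asset,
        "process_unit": ctx.process_unit,
        "unit": ctx.unit_of_measure,
        "within_normal_range": low <= value <= high,
    }
```

A call such as `contextualize("PT-4401", 7.2)` turns a bare number into a statement about a specific pump in a specific process unit, which is the form a model or an operator can act on.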

Data quality is another key consideration when scaling AI. Poor-quality data, whether due to gaps, inconsistencies, or errors, can lead to misleading AI outputs and operational inefficiencies. Ensuring data accuracy, completeness, and timeliness is essential to maintaining AI reliability. A strong DataOps strategy plays a crucial role in maintaining data quality by implementing automated data validation, cleansing, and governance processes. Additionally, DataOps ensures continuous data updates, allowing AI models to work with real-time or near-real-time information that reflects current conditions. This enables organizations to deploy AI solutions that adapt dynamically to changing operational environments, enhancing their ability to drive efficiency, reduce downtime, and improve decision-making.
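The automated validation described above could take many forms. As one possible sketch, the function below checks a time series for the three problems mentioned: gaps, erroneous values, and stale data. The thresholds and the `validate_series` name are assumptions chosen for the example, not recommended settings.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple

Point = Tuple[datetime, Optional[float]]


def validate_series(
    points: List[Point],
    now: datetime,
    max_gap: timedelta = timedelta(minutes=5),    # assumed sampling tolerance
    max_age: timedelta = timedelta(minutes=15),   # assumed freshness requirement
    valid_range: Tuple[float, float] = (-50.0, 500.0),  # assumed physical limits
) -> List[str]:
    """Return a list of human-readable issues; an empty list means the series passed."""
    if not points:
        return ["empty series"]
    issues = []
    low, high = valid_range
    # Completeness: look for gaps between consecutive timestamps.
    for (t_prev, _), (t_next, _) in zip(points, points[1:]):
        if t_next - t_prev > max_gap:
            issues.append(f"gap of {t_next - t_prev} after {t_prev.isoformat()}")
    # Accuracy: look for missing or physically implausible values.
    for ts, value in points:
        if value is None:
            issues.append(f"missing value at {ts.isoformat()}")
        elif not low <= value <= high:
            issues.append(f"out-of-range value {value} at {ts.isoformat()}")
    # Timeliness: the newest point must be recent enough.
    if now - points[-1][0] > max_age:
        issues.append("stale: latest reading is older than max_age")
    return issues
```

Running a check like this before data reaches a model gives a DataOps pipeline a clear point at which to quarantine or repair bad data.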

By establishing a robust data infrastructure, breaking down silos, contextualizing information, and prioritizing data quality, organizations can successfully scale AI across their operations. A well-executed DataOps strategy ensures that AI models are always working with fresh, accurate, and meaningful data, unlocking new opportunities for automation, optimization, and innovation. In an era where AI-driven insights are becoming a competitive differentiator, access to industrial data is no longer a luxury; it is a necessity.

The Value of Training LLMs on Company-Owned Data

A key factor in scaling AI successfully is the ability to leverage corporate data, especially for training AI models. To that end, large language models (LLMs) must be trained on company-owned data. Why? Industrial environments generate vast amounts of unique operational data that hold insights crucial for improving efficiency, safety, and decision-making.

Relying on generic AI models trained on publicly available datasets often fails to deliver precise and context-aware results. By training LLMs on proprietary data, organizations can develop AI systems that understand specific operational nuances, equipment behaviors, and workflow requirements. This enables AI-driven insights tailored to real-world industrial challenges, ultimately leading to better outcomes and increased return on investment (ROI).

Moreover, proprietary data-driven LLMs enhance knowledge retention and transfer within the organization. Many industrial companies struggle with the loss of institutional knowledge when experienced employees retire or leave. AI trained on internal data can help capture expertise and make it accessible to new employees, ensuring operational continuity and reducing onboarding time.

Bringing Best Practices and Technology to the Fore

For AI to deliver value at scale, industrial organizations must establish and promote best practices that enable seamless AI adoption across all operations. This includes developing clear AI governance frameworks, implementing explainable AI (XAI) to build trust among stakeholders, and fostering a data-driven culture that encourages employees to engage with AI tools.

Additionally, companies must invest in AI-ready infrastructure, such as cloud-based data platforms and industrial knowledge graphs, to ensure seamless integration of AI technologies. Without a strong technological foundation, AI efforts remain fragmented and difficult to scale. Businesses should also prioritize upskilling employees, providing training programs that equip workers with the skills necessary to interpret AI-generated insights and make data-driven decisions.

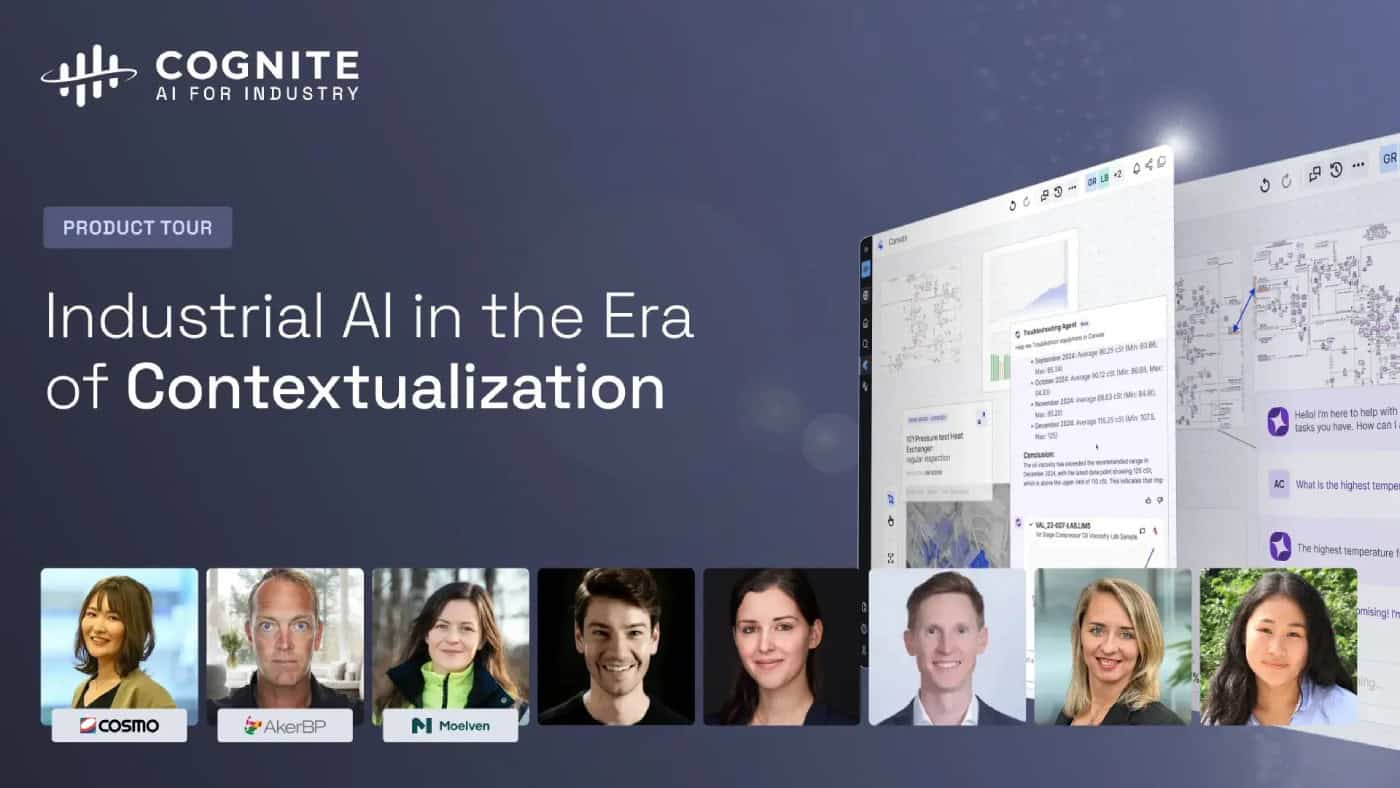

The Role of AI Agents in Industrial Decision-Making

As organizations scale their AI efforts, many are focusing on AI agents. Such agents are playing an increasingly vital role in industrial decision-making by automating complex processes, analyzing vast amounts of data, and providing actionable insights in real time. These agents can monitor production lines, predict equipment failures, and optimize supply chains, significantly enhancing efficiency and reducing operational risks.

However, despite their growing capabilities, AI agents do not operate in isolation; human oversight remains essential. Engineers, operators, and decision-makers work alongside AI to validate recommendations, intervene in critical situations, and provide strategic direction. This human-in-the-loop approach ensures that AI-driven decisions align with business objectives, regulatory requirements, and real-world constraints that algorithms may not fully comprehend.
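One simple way to picture a human-in-the-loop gate is sketched below. The routing rule is an assumption made for illustration: safety-critical or low-confidence recommendations go to a person, and the rest run automatically. The `Recommendation` fields and the 0.9 threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Recommendation:
    action: str
    confidence: float      # 0.0 to 1.0, as reported by the agent
    safety_critical: bool


def decide(
    rec: Recommendation,
    approve: Callable[[Recommendation], bool],
    auto_threshold: float = 0.9,  # assumed cutoff for unattended execution
) -> str:
    """Route a recommendation either to automatic execution or to a human reviewer."""
    needs_review = rec.safety_critical or rec.confidence < auto_threshold
    if not needs_review:
        return "executed_automatically"
    # `approve` stands in for an operator's decision, e.g. a button in an HMI.
    return "executed_after_approval" if approve(rec) else "rejected_by_human"
```

In this sketch the `approve` callback is where an engineer validates or overrides the agent, so critical actions never bypass a person.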

Additionally, to maximize the impact of AI in industrial environments, organizations must move beyond single-task AI agents and adopt a broader operations automation mindset. Traditional AI applications are often built to handle isolated tasks, such as detecting anomalies in sensor data or optimizing energy consumption. While these use cases provide value, they do not unlock the full potential of AI-driven automation. Instead, organizations should focus on orchestrating multiple AI agents to work together across entire workflows, integrating insights from maintenance, production, logistics, and supply chain operations. By interconnecting agents, industries can achieve more holistic automation, where AI dynamically adapts to changing conditions and drives efficiencies across the entire value chain.
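The difference between single-task agents and an orchestrated workflow can be sketched in a few lines. In the toy example below, three hypothetical agents (maintenance, production, logistics) each read a shared state and contribute their own findings, so a decision made by one flows into the next. The agent logic, thresholds, and field names are placeholders, not a real agent framework.

```python
from typing import Callable, Dict, List

State = Dict[str, object]
Agent = Callable[[State], State]


def maintenance_agent(state: State) -> State:
    # Flags the asset when vibration exceeds an assumed limit of 7.0 mm/s.
    return {"asset_at_risk": state["vibration_mm_s"] > 7.0}


def production_agent(state: State) -> State:
    # Lowers throughput by an assumed 20% while the asset is at risk.
    factor = 0.8 if state["asset_at_risk"] else 1.0
    return {"target_rate": state["planned_rate"] * factor}


def logistics_agent(state: State) -> State:
    # Reschedules shipments whenever output will fall short of plan.
    return {"reschedule_shipments": state["target_rate"] < state["planned_rate"]}


def orchestrate(agents: List[Agent], initial: State) -> State:
    """Run agents in order, merging each agent's output into the shared state."""
    state = dict(initial)
    for agent in agents:
        state.update(agent(state))
    return state
```

Calling `orchestrate([maintenance_agent, production_agent, logistics_agent], {"vibration_mm_s": 9.1, "planned_rate": 100.0})` shows a maintenance finding propagating into production and logistics decisions, which isolated agents would not do.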

Human interaction with AI agents must evolve alongside this shift. Rather than simply reacting to AI-generated insights, industrial teams should engage with AI in a supervisory and strategic capacity, setting automation goals, refining AI models, and interpreting complex outcomes. This requires intuitive human-AI interfaces that enable clear communication, explainability, and trust. AI agents should not only provide recommendations but also justify their reasoning in a way that makes sense to human operators, allowing for better decision-making. As AI systems grow more sophisticated, businesses must also invest in upskilling their workforce, ensuring that employees can effectively collaborate with AI to unlock its full potential.

By embracing an operations automation mindset and fostering seamless human-AI collaboration, industrial organizations can move beyond fragmented AI deployments toward fully integrated, autonomous decision-making systems. This approach not only enhances efficiency and scalability but also ensures that AI remains a tool for augmenting human expertise rather than replacing it. In the end, the most successful industrial AI strategies will be those that balance automation with human oversight, leveraging AI to drive smarter, faster, and more resilient decision-making across the enterprise.

AI Agent Use Cases

As organizations adopt AI agents and scale their use, several horizontal use cases show strong potential to deliver on the promise of AI.

One is AI-driven troubleshooting. As industrial operations become increasingly complex, organizations must find ways to troubleshoot problems more quickly and efficiently. Traditional methods, such as schedule-based maintenance, manual log reviews, and experience-based problem-solving, are no longer sufficient in the face of large-scale data generation and the growing need for rapid decision-making. With data streams coming from IoT sensors, industrial control systems, and other sources, engineers and operators must sift through vast amounts of information to pinpoint issues.

To address this challenge, industrial organizations can benefit from a centralized, AI-driven troubleshooting workspace. Such a platform consolidates real-time sensor data, historical trends, maintenance logs, technical documentation, and other essential information into a single, easy-to-navigate interface. By leveraging generative AI (GenAI), engineers can quickly retrieve relevant insights by asking natural language questions instead of manually searching databases. This approach significantly reduces troubleshooting time, enabling teams to act swiftly and with greater confidence.

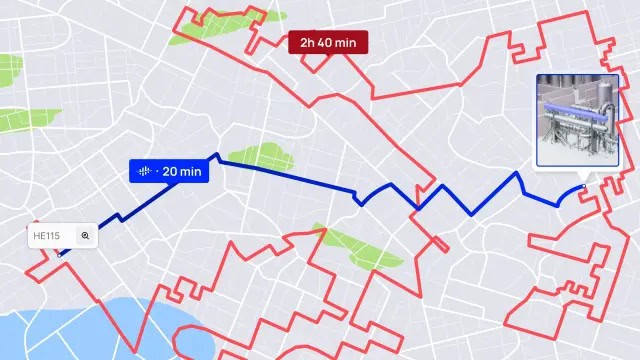

A second common use case is Root Cause Analysis (RCA). RCA is essential for maintaining operational efficiency and safety. However, traditional RCA methods often face challenges such as dispersed data sources, fragmented collaboration, and limited access to historical analyses, leading to inefficiencies and prolonged downtime.

AI helps with RCA in several ways. First, AI-enabled data access allows users to interact with engineering diagrams to quickly access related data, including time series charts, P&ID drawings, and 3D models, all contextualized within an industrial knowledge graph. Second, AI-assisted document summarization and natural language queries via AI chatbots enable intelligent document searches and provide natural language summaries, reducing manual effort in data retrieval. Together, these capabilities streamline the RCA process, enabling engineers to focus on critical analysis and implement effective solutions promptly.
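To show how a knowledge graph can bring related RCA material together, the sketch below walks a tiny in-memory graph outward from one asset and collects the time series, drawings, and documents linked to it. The graph contents, node identifiers, and relation names are all hypothetical, and a breadth-first search over a dictionary stands in for whatever graph store an organization actually uses.

```python
from collections import deque
from typing import Dict, List, Tuple

# Hypothetical graph: node -> list of (relation, neighbor).
GRAPH: Dict[str, List[Tuple[str, str]]] = {
    "pump:P-101": [
        ("has_timeseries", "ts:P-101.discharge_pressure"),
        ("shown_on", "pid:PID-0042"),
        ("feeds", "exchanger:E-201"),
    ],
    "exchanger:E-201": [
        ("has_timeseries", "ts:E-201.outlet_temp"),
        ("documented_in", "doc:RCA-2021-017"),
    ],
}


def related(start: str, max_hops: int = 2) -> List[Tuple[str, str, int]]:
    """Breadth-first search returning (node, relation, hops) for everything near `start`."""
    seen = {start}
    queue = deque([(start, 0)])
    found = []
    while queue:
        node, hops = queue.popleft()
        if hops == max_hops:
            continue  # do not expand beyond the hop limit
        for relation, neighbor in GRAPH.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                found.append((neighbor, relation, hops + 1))
                queue.append((neighbor, hops + 1))
    return found
```

With `max_hops=1` the search returns only what is directly attached to the pump; raising the limit also surfaces the downstream exchanger's data and its earlier RCA report, which is the kind of historical analysis that is otherwise hard to find.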

In addition to these broad applications, there are numerous industry-specific use cases, including:

- In the chemical industry, AI agents can monitor and control chemical processes in real time, minimizing risks associated with equipment failures, leaks, or hazardous reactions.

- In the manufacturing sector, AI agents can help facilitate the use of predictive maintenance by constantly monitoring equipment health and predicting when failures are likely to occur.

- In the energy industry, AI agents can improve grid management, optimize energy distribution, and enhance asset performance.

A Final Word on Scaling AI

Scaling AI effectively requires a strategic, holistic approach. By adopting best practices in data access and applying advanced AI, an organization can move from a state where AI merely holds promise to one where it drives the organization's success.

Businesses must move beyond isolated AI applications and implement uniform data, analytics, and AI practices across the enterprise to ensure consistency and scalability. By bringing best practices and robust technology to the forefront, industrial organizations can fully capitalize on their data assets and realize the full potential of AI at scale. With a well-planned AI strategy, companies can drive innovation, improve operational efficiency, and maintain a competitive edge in an increasingly digitalized industrial landscape.