The AI Ladder, along with CRISP-DM, provides a strategic approach to creating CI applications on real-time data.

As described in The Case for Continuous Intelligence, continuous intelligence (CI) applications use a wide range of artificial intelligence techniques, including both machine learning and deep learning, to deliver value. But how do you build, maintain, and manage AI? The AI Ladder provides principles to modernize your information architecture and speed your journey to AI. It has four distinct stages:

- Collect: Make data simple and accessible

- Organize: Create a business-ready analytics foundation

- Analyze: Build and scale AI with trust and transparency

- Infuse: Operationalize AI throughout the business

Streaming engines need capabilities that simplify each of these stages. To collect, the engine must ingest structured and unstructured data and cleanse it as it arrives, so that dirty data does not lead to erroneous recommendations. To organize, it needs well-understood data definitions and catalogs. To analyze, it must support building trusted analytics models with a variety of tools and languages. Finally, to infuse, it must let you embed AI in your CI applications.


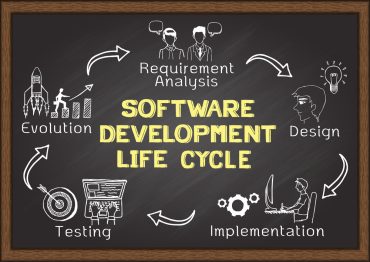

The Cross-Industry Standard Process for Data Mining (CRISP-DM) has been used for many years to help build analytic models. Its life cycle model provides six stages to guide data mining efforts. What’s cool is that this same model can be applied to your CI apps using streaming engines, as long as you keep a few things in mind.

How do you handle missing, erroneous, and mis-timed data?

Just as CRISP-DM has a stage for data preparation, your CI app must prepare the data in real time. This could include enriching data, reformatting data, and correcting missing or erroneous data.

One of the biggest problems that data scientists face is dealing with missed sensor reads. These can result from short-term equipment malfunctions or communications errors. For example, geospatial information from vehicles is prone to errors between tall buildings or in tunnels. The streaming engine needs a way to fill in the missing data, and there are several methods to choose from. It could simply repeat the last known value, or it could use a model such as a moving average regression to estimate the missing values.
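As a rough illustration of the two ideas above, the sketch below fills gaps in a stream of readings, first by repeating the last known value and then with a plain moving average. It assumes missing reads arrive as `None`; the function names and the `window` parameter are hypothetical, and the simple average stands in for the regression model mentioned above rather than implementing it.

```python
from collections import deque
from typing import Iterable, Iterator, Optional


def fill_last_known(readings: Iterable[Optional[float]]) -> Iterator[Optional[float]]:
    """Replace each missing reading (None) with the last known value."""
    last = None
    for value in readings:
        if value is not None:
            last = value
        yield last  # stays None until the first real reading arrives


def fill_moving_average(
    readings: Iterable[Optional[float]], window: int = 3
) -> Iterator[Optional[float]]:
    """Replace each missing reading with the mean of the last `window` values.

    Filled values are added to the window too, so a long gap decays toward
    the recent average instead of stalling.
    """
    recent = deque(maxlen=window)
    for value in readings:
        if value is None and recent:
            value = sum(recent) / len(recent)
        if value is not None:
            recent.append(value)
        yield value
```

For example, `list(fill_last_known([1.0, None, None, 4.0]))` yields `[1.0, 1.0, 1.0, 4.0]`. Both functions are generators, so they can sit in a pipeline and process readings one at a time as they arrive.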

Another issue to consider is whether one time series could affect another. In data center monitoring, for example, does database response time affect overall application response time? If the sampling rate varies between sensors, it’s good to have an easy way to resample the data: downsample from 500 readings per second to 100, say, or upsample from 50 readings per second to 100. The two time series can then be compared directly.
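The resampling described above can be sketched in a few lines. This is a minimal example that assumes evenly spaced samples and integer rate ratios (a factor of 5 for 500 to 100 readings per second, a factor of 2 for 50 to 100); the function names are hypothetical, and block averaging and linear interpolation are just one reasonable choice of method.

```python
from typing import List, Sequence


def downsample(samples: Sequence[float], factor: int) -> List[float]:
    """Average each consecutive group of `factor` samples into one value.

    A trailing partial group is dropped.
    """
    return [
        sum(samples[i:i + factor]) / factor
        for i in range(0, len(samples) - factor + 1, factor)
    ]


def upsample(samples: Sequence[float], factor: int) -> List[float]:
    """Raise the sampling rate by `factor` using linear interpolation."""
    out: List[float] = []
    for a, b in zip(samples, samples[1:]):
        for k in range(factor):
            out.append(a + (b - a) * k / factor)
    if samples:
        out.append(samples[-1])  # keep the final original sample
    return out
```

With these helpers, `downsample(readings_500hz, 5)` and `upsample(readings_50hz, 2)` both produce series at 100 readings per second that can be lined up index by index.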

How quickly should models be updated?

Some models can be built and dynamically updated using streaming data. Other models require training data stored in a database. These models may drift, so you need to retrain them for best results.

Models built directly on streaming data read in a number of records to create the initial model and then update it continuously as more data arrives. IBM Streams in Cloud Pak for Data includes about 20 of these.
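To make the idea of a continuously updated model concrete, here is a small sketch of one: a running mean and standard deviation, maintained with Welford's online algorithm, that flags readings far from what it has seen so far. It is a generic illustration and not one of the models shipped with IBM Streams; the class name and the `threshold` default are assumptions.

```python
import math


class RunningStats:
    """Mean and standard deviation updated one record at a time."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        """Fold one new reading into the model (Welford's algorithm)."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        """Sample standard deviation, or 0.0 with fewer than two readings."""
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0

    def is_anomaly(self, x: float, threshold: float = 3.0) -> bool:
        """True when x lies more than `threshold` deviations from the mean."""
        if self.n < 2 or self.std == 0.0:
            return False
        return abs(x - self.mean) / self.std > threshold
```

A typical loop would call `is_anomaly(x)` on each incoming reading and then `update(x)`, so the model keeps adapting without ever storing the full history.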

Continuously updated models are well suited to classes of problems where the data itself is continuously changing. For example, sunlight and temperature change throughout the year, so forecasting electric usage needs a model that also changes gradually. Anomaly detection in process manufacturing is another example: processes in oil refineries must be adjusted as raw materials change subtly or as components fail.

However, for many other classes of problems, you need to start with training data: records that an expert has already labeled. Fraud detection fits this category, as do deep learning models for vision or acoustics. In both cases, you need a training set in which each record is labeled, for example as fraudulent or legitimate, or as a dog or a wolf. The model is created from the training data and then loaded into a streaming engine to score records in real time.

Models will drift over time as business processes or the data change, so they must be evaluated continuously. There are two approaches to updating models: periodically or on demand. If the rate of drift is well known, updates might be scheduled on a weekly or biweekly basis. It’s also possible to monitor the scores and watch for changes in the average confidence level. When it has drifted by a significant amount, a model update can be scheduled immediately.
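The on-demand approach can be sketched as a monitor that tracks the average confidence over a sliding window of recent scores and signals when it falls too far below a baseline. The class name, the `baseline`, `tolerance`, and `window` parameters, and the rule itself are illustrative assumptions; a production system would likely use a more careful drift test.

```python
from collections import deque


class DriftMonitor:
    """Signal a retrain when average confidence drops below a baseline."""

    def __init__(self, baseline: float, tolerance: float = 0.1, window: int = 100) -> None:
        self.baseline = baseline    # average confidence measured at deployment
        self.tolerance = tolerance  # how far the average may fall before retraining
        self.recent = deque(maxlen=window)

    def observe(self, confidence: float) -> bool:
        """Record one score's confidence; return True when retraining is due."""
        self.recent.append(confidence)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough scores yet to judge
        average = sum(self.recent) / len(self.recent)
        return self.baseline - average > self.tolerance
```

The scoring path would call `observe()` with each score's confidence and schedule a model update the first time it returns `True`.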

A caveat about continuously updated models

Business processes that require strict governance are not well suited to continuously updated models. When making customer recommendations, as in loan processing, banks must be able to explain the recommendation and the decision as of a specific point in time. A tool like IBM Watson OpenScale helps customers explain what’s happening and why a recommendation was made.

If you are receiving hundreds of thousands or millions of events per second and continuously updating the model, it is impractical to save a copy of the exact model in use each time a recommendation is made, let alone tie each model state to a specific recommendation. In these use cases, models must be built offline and only scored in your CI applications. This lets you know which version of a model was in use at any particular time.
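One way to keep that link between a recommendation and the model behind it is to stamp every score with the model's version. The sketch below is a minimal illustration; `VersionedModel`, `ScoredRecord`, and `score` are hypothetical names, and the model is represented as a plain callable built offline.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class VersionedModel:
    """An offline-built model paired with an immutable version label."""
    version: str
    predict: Callable[[Dict[str, float]], float]


@dataclass(frozen=True)
class ScoredRecord:
    """A score together with the version of the model that produced it."""
    record_id: str
    score: float
    model_version: str


def score(model: VersionedModel, record_id: str, features: Dict[str, float]) -> ScoredRecord:
    """Score one record and tag the result with the model version."""
    return ScoredRecord(record_id, model.predict(features), model.version)
```

Because the version travels with each result, an auditor can later retrieve the archived model with that version and reproduce the recommendation.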

How do you reload the models?

Since the goal is to have continuous intelligence applications, it’s important to have a streaming engine that can update models without stopping applications. One approach is for the scoring operator to receive a control signal indicating that a new model is available. The scoring operator applies back pressure to the incoming data and then loads the updated model. Once the model is loaded, the back pressure is released, and data continues flowing through the application.
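The reload pattern above can be approximated in a few lines. In this sketch a lock stands in for back pressure: while a new model is loading, callers of `score()` simply wait. The class and method names are hypothetical, and a real streaming engine would apply back pressure through its own flow control rather than a Python lock.

```python
import threading
from typing import Any, Callable

Model = Callable[[Any], Any]


class ScoringOperator:
    """Scores records and swaps models without stopping the application."""

    def __init__(self, model: Model) -> None:
        self._model = model
        self._lock = threading.Lock()

    def score(self, record: Any) -> Any:
        # Blocks while a reload holds the lock, which pushes back on upstream.
        with self._lock:
            return self._model(record)

    def reload(self, load_model: Callable[[], Model]) -> None:
        """Handle the control signal: pause scoring, load, then resume."""
        with self._lock:
            self._model = load_model()
```

A control thread would call `reload()` when it is notified that a new model is available, while data threads keep calling `score()`; no record is dropped, and none is scored against a half-loaded model.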

Summary

Streaming analytics plays a crucial role in helping organizations operationalize AI. The AI Ladder, along with CRISP-DM, provides a strategic approach to creating CI applications on real-time data. Having the right tools can speed development, testing, and operations, whether you are calculating missing data, updating your models, evaluating model drift, or reloading models in real time. Leverage the power of streaming analytics to modernize your data architecture and operationalize AI across your business.

Read Roger Rea’s other Real Talk articles: