Burgeoning data flowing from industrial sensors, devices and machines can help companies realize operational efficiency gains. But as RTInsights contributor Dan Potter explains, companies will only reap these benefits if they can gain insights in real time, as events happen.

In asset-intensive industries, thousands of sensors and controllers continually measure, report and record the pulse of the real world (temperatures, pressures, flaws and vibrations), often at sub-second intervals, and time-stamp the data. The volume, variety and velocity of this operational technology (OT) data are so great that calling it simply “Big Data” doesn’t really do it justice. For example, a typical wind farm may generate 150,000 data points per second. A smart meter project can generate 500 million readings daily. And weather analysis can involve petabytes of data a day.
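To put the first two figures on a common scale, here is a small back-of-the-envelope sketch. It uses only the numbers quoted above; the variable names are illustrative, and the smart meter rate assumes readings are spread evenly across the day, which real deployments may not do.

```python
# Back-of-the-envelope conversion of the data rates quoted in the article.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds

wind_farm_per_second = 150_000      # data points per second (from the article)
smart_meter_per_day = 500_000_000   # readings per day (from the article)

# Convert each figure to the other time scale.
wind_farm_per_day = wind_farm_per_second * SECONDS_PER_DAY
# Assumption: readings arrive evenly over the day.
smart_meter_per_second = smart_meter_per_day / SECONDS_PER_DAY

print(f"Wind farm: {wind_farm_per_day:,} data points per day")
print(f"Smart meters: about {smart_meter_per_second:,.0f} readings per second")
```

On these numbers, the wind farm alone produces roughly 13 billion data points a day, about 26 times the daily volume of the smart meter project.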

Information flowing from the industrial Internet of Things (IoT) requires in-the-moment understanding of what’s important and why. The further in time that understanding moves away from the generation of the information, the less opportunity you have to make important corrections or changes. To this point, however, the huge volumes of data collected from the IoT have simply been stored, often never to be touched again.

The IT/OT Integration Challenge

OT takes on a number of forms. Some OT monitors technical parameters and ensures safe operation of complex machines (such as those in power plants, offshore drilling rigs and aircraft), reporting or even shutting down equipment that is in danger of malfunctioning. Other OT includes event-driven software applications or devices with embedded software, or systems that manage and control mission-critical production and delivery processes. Usable OT data doesn’t even necessarily have to come from a company’s own operations; if a company delivers equipment enabled with OT, the dark data may actually come from its customers.

The most basic obstacle to extracting value from this OT-generated data is connecting it to traditional Information Technology (IT) systems. This integration is problematic because IT and OT evolved in different environments and use different data types and architectures. While IT evolved from the top down, primarily focusing on serving business and financial needs, OT grew from the bottom up, with many different proprietary systems designed to control specific equipment and processes. IT systems are based on well-established standards that can integrate large volumes of information across different applications. In the world of OT, no single industry standard yet exists for machine-to-machine (M2M) connectivity.

Yet, despite these disparities, the business case for integrating IT and OT is not only compelling; integration should be compulsory. With data security a growing concern, and sensors and controllers often embedded within critical infrastructure, malicious hacking of the IoT is becoming a very serious threat. Exposing this information in real time for active monitoring and management could greatly improve IoT security.

Further, combining historical operational data with real-time OT data streaming from the IoT could dramatically transform asset-intensive industries such as energy, manufacturing, oil & gas, mining, utilities and healthcare, creating savings and growth opportunities through improved Asset Performance Management (APM) and product/service quality.

The good news is, some integration-easing standardization is starting to emerge. The Telecommunications Industry Association (TIA), which represents manufacturers and network suppliers, has proposed an M2M protocol standard, with the goal of rallying manufacturers around the standard. There is also a renewed interest from manufacturers in more generic standards such as the OPC Unified Architecture (OPC UA), which could enable IT/OT integration.

IoT Analytics in the Real World

Beyond impressive volumes and issues around system integration, it’s the relevance of time that really distinguishes the world of OT data from what has traditionally been called Business Intelligence (BI). Information flowing from sensors and embedded systems needs to be understood in real time, as important events happen, not left sitting in a batch process waiting to hit a data warehouse.

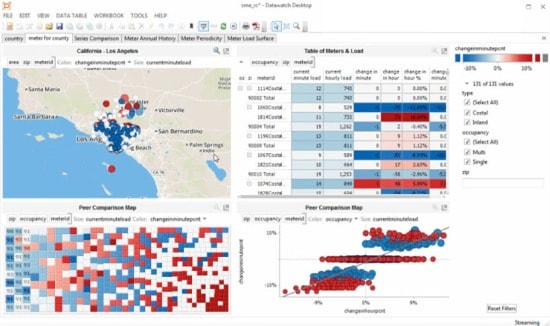

Seeing live, actionable information in an intuitive visual display precisely exposes how operations are doing in that instant. It gives new power directly to Line of Business (LOB) users who can see exactly what is happening as it occurs—and precisely understand how multiple systems or processes are performing.

Integrating streaming OT data with historical IT data in a visualization platform gives companies the ability to understand not only how systems are performing but also how that real-time performance compares to historical averages. When an outlier or anomaly is spotted, this OT data, combined with historical data, provides a comprehensive understanding for faster decision making. By using these real-time insights against historical context, alerts can be set to notify business users when certain conditions or thresholds are reached.

For example, business users could monitor expensive pieces of equipment and shut them down when temperatures or pressures exceed thresholds that signal a risk of failure and downtime. With the ability to understand real-time data against historical norms and averages, these shutdown alerts can be smarter, cutting the noise and inefficiency of simpler, less insightful monitoring approaches. Similarly, users can get smarter about when to schedule maintenance based upon a more nuanced understanding of real-world performance data.
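As a rough illustration of how a live reading might be judged against historical context, consider the minimal sketch below. It is not taken from any particular product: the function names, the sample temperatures, the 95-degree hard limit and the three-standard-deviation rule are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarize historical readings as (mean, sample standard deviation)."""
    return mean(history), stdev(history)


def check_reading(value, baseline, hard_limit, z_threshold=3.0):
    """Classify a live reading as 'shutdown', 'alert' or 'ok'.

    hard_limit is a fixed safety threshold (hypothetical value);
    z_threshold is how many standard deviations from the historical
    mean a reading may drift before users are notified.
    """
    avg, sd = baseline
    if value >= hard_limit:
        return "shutdown"  # fixed safety threshold exceeded
    if sd > 0 and abs(value - avg) / sd > z_threshold:
        return "alert"     # unusual compared with historical norms
    return "ok"


# Hypothetical historical temperatures for one piece of equipment.
history = [70.0, 71.5, 69.8, 70.4, 70.9, 69.5, 70.2, 71.0]
baseline = build_baseline(history)

# Simulated live readings arriving from a sensor stream.
for reading in [70.6, 78.0, 96.0]:
    print(reading, check_reading(reading, baseline, hard_limit=95.0))
```

The point of the two-tier check is that a reading can be flagged as unusual for this particular asset well before it reaches the fixed shutdown limit, which is what lets alerts be earlier and less noisy than a single static threshold.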

Use Cases

Using this real-time approach, an equipment manufacturer that manages skids for transferring hydrocarbons in the petroleum industry built a cloud-based, remote-monitoring system. Data collected from flow meters that monitor temperature in the skids is sent to the cloud and provided to customers as a service to help drive process improvements as well as to support predictive and preventative maintenance programs. The data also fuels financial processes for improved billing. This service avoided system degradation, which could have led to quality and cost issues (a 1 percent error each day can add up to more than $1 billion in lost revenue per year).

Similarly, a global producer of cold storage solutions is able to monitor the temperature of assets such as store freezers and refrigerators in 4,500 supermarkets worldwide, analyzing thermostats, evaporators, fans and compressors to greatly reduce unplanned failures and cut expensive downtime and service calls.

A railroad brake manufacturer was able to attach sensors to brakes installed in trains. Aggregating this data with historical IT data enabled locomotive owners to better educate engineers as well as better manage the acceleration and braking of trains, in real time, throughout thousand-mile trips. This insight helped train companies save up to 1 percent in fuel costs.

And, finally, delivery companies such as United Parcel Service (UPS), along with airlines and shipping firms, are using industrial IoT analytics to monitor weather conditions, traffic patterns and vehicle locations to constantly optimize routes, reducing wear and tear and fuel consumption while improving safety and on-time delivery.

Conclusion

IoT analytics that integrate OT and IT data hold the potential to dramatically reduce the time to deliver business-critical information, provided the data can be processed, understood and analyzed in real time. As the cost of sensors and communications comes down, asset-intensive companies are finding themselves riding what may soon be called the most transformational wave in business.
