Apache Druid is designed for speed, scale, and streaming data, which makes it well suited to analyzing sensor data.

As digital technologies become woven into everyday life, the Internet of Things (IoT) grows increasingly important. A catch-all category for a range of devices, including sensors, monitors, and other smart devices, IoT links the digital and the physical, two previously separate worlds, so that data from physical devices can be analyzed and processed to yield important insights.

The value of IoT data is felt across every sector of the economy, from manufacturing to logistics to retail. By harnessing telemetry and metrics from a range of sources, including drones, delivery trucks, medical devices, construction equipment, and security cameras, IoT data provides a real-time glimpse into the operational landscape.

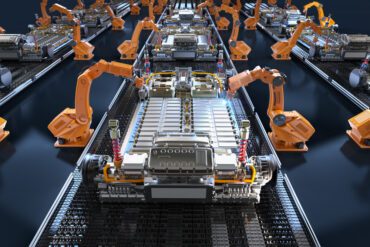

One example is solar power: by tracking the sun’s movement across the sky and angling panels to follow it, IoT sensors can help photovoltaic arrays generate as much electricity as possible. Sensors and smart meters on streetlights can track pedestrian traffic and energy consumption and adjust brightness accordingly, so that the lights use only the electricity they require. Finally, intelligent assembly lines can quickly identify anomalously high temperatures and respond as needed, whether by stopping production immediately or activating fire suppression systems.

At its core, the value of IoT lies in its ability to extract actionable information and help teams respond. A better understanding of usage patterns helps a company anticipate changing consumer behavior, imagine the next evolution of its product, or automate routine (but critical) responsibilities.


Requirements for IoT data

Still, IoT data can be challenging for organizations that are unprepared for it. Its real-time nature, combined with its volume and varied formats (such as telemetry, metrics, and logs), adds considerable complexity to any environment. To compound matters, this data usually arrives quickly and in massive streams, making it difficult to use before it becomes stale.

To fully harness the potential of IoT data, organizations need a database that can meet the following demands:

Rapid, real-time exploration across large datasets. Because IoT devices stream vast amounts of time-series data, any database for IoT has to be optimized for collecting, organizing, storing, and analyzing this type of data. However, many databases are not designed for time-series data: they lack an efficient storage structure, key query features, or important capabilities such as densification or gap filling (filling in missing time intervals so that a series has a value for every period).

Instant availability for query and analysis. Because IoT data is so time-sensitive, working with it requires rapid response times. Any database has to ingest data quickly, make it available for analytics and queries, and return sub-second responses regardless of user or query traffic.

Elastic scalability. Because IoT data volumes fluctuate unpredictably, any database has to scale seamlessly and automatically, without manual intervention or service disruptions.

Reliability and durability. Because IoT devices operate nonstop, any database has to run without downtime or data loss. An efficient long-term storage layer is also essential for managing the IoT data that accumulates over time.

Speed under load. Even as IoT datasets grow to terabytes or petabytes, IoT databases cannot slow down. They must keep delivering fast, consistent results as the number of users and the query rate increase.
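To make densification and gap filling from the first requirement more concrete, here is a minimal sketch in plain Python. It is not Druid code, and the `densify` function and its parameters are hypothetical. It assumes readings have already been aggregated into fixed-width time buckets (a dict keyed by bucket start time) and returns one row per bucket, either leaving empty buckets as `None` or carrying the last known value forward.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Tuple


def densify(
    readings: Dict[datetime, float],
    start: datetime,
    end: datetime,
    step: timedelta,
    carry_forward: bool = False,
    default: Optional[float] = None,
) -> List[Tuple[datetime, Optional[float]]]:
    """Hypothetical helper: emit one (bucket_start, value) row per step in [start, end).

    Buckets present in `readings` keep their value. Missing buckets get either
    the last seen value (carry_forward=True) or `default`.
    """
    series: List[Tuple[datetime, Optional[float]]] = []
    last = default  # value used for gaps before any reading has been seen
    t = start
    while t < end:
        if t in readings:
            value = readings[t]
            last = value  # remember it in case the next buckets are empty
        else:
            value = last if carry_forward else default
        series.append((t, value))
        t += step
    return series


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1)
    minute = timedelta(minutes=1)
    # Two readings with a two-minute gap between them.
    readings = {t0: 21.5, t0 + 3 * minute: 22.0}
    for ts, value in densify(readings, t0, t0 + 5 * minute, minute, carry_forward=True):
        print(ts.strftime("%H:%M"), value)
```

Carrying the last value forward often suits slowly changing signals such as temperature; for event counts, filling empty buckets with zero (`default=0.0`) is usually the more faithful choice.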

Why Apache Druid for IoT applications

Apache Druid was designed for speed, scale, and streaming data. With native support for streaming platforms such as Apache Kafka and Amazon Kinesis, Druid does not require external connectors or complex workarounds to ingest streaming data.
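As an illustration of native Kafka ingestion, the sketch below builds a Kafka supervisor spec as a Python dict. The field layout follows Druid's documented Kafka ingestion spec for recent releases, but it should be checked against the version you run; the datasource, topic, column names, broker address, and Router URL are all assumptions, and `build_kafka_supervisor_spec` and `submit_spec` are hypothetical helpers. Submitting the spec to the `/druid/indexer/v1/supervisor` endpoint starts a supervisor that manages Kafka indexing tasks inside Druid itself.

```python
import json
import urllib.request


def build_kafka_supervisor_spec(datasource: str, topic: str, bootstrap_servers: str) -> dict:
    """Hypothetical helper: a minimal Kafka supervisor spec with placeholder names."""
    return {
        "type": "kafka",
        "spec": {
            "dataSchema": {
                "dataSource": datasource,
                # Assumes each JSON event carries an ISO-8601 "timestamp" field.
                "timestampSpec": {"column": "timestamp", "format": "iso"},
                "dimensionsSpec": {
                    "dimensions": [
                        "device_id",
                        "location",
                        "sensor_type",
                        {"type": "double", "name": "reading"},
                    ]
                },
                # Hourly segments and no rollup: every reading is stored as its own row.
                "granularitySpec": {
                    "segmentGranularity": "hour",
                    "queryGranularity": "none",
                    "rollup": False,
                },
            },
            "ioConfig": {
                "topic": topic,
                "inputFormat": {"type": "json"},
                "consumerProperties": {"bootstrap.servers": bootstrap_servers},
                "useEarliestOffset": True,
            },
            "tuningConfig": {"type": "kafka"},
        },
    }


def submit_spec(spec: dict, router_url: str = "http://localhost:8888") -> str:
    """POST the spec to the supervisor endpoint; router_url is an assumed local address."""
    req = urllib.request.Request(
        router_url + "/druid/indexer/v1/supervisor",
        data=json.dumps(spec).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    spec = build_kafka_supervisor_spec("sensor_readings", "sensor-events", "localhost:9092")
    print(json.dumps(spec, indent=2))
    # submit_spec(spec)  # uncomment only when a Druid cluster is reachable
```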

Druid also provides query on arrival, a critical advantage for perishable IoT data. Whereas conventional databases ingest events in batches and write them to files before users can access the data, Druid ingests streaming data event by event directly into memory on its data nodes. As a result, data can be queried as soon as it arrives, giving immediate access to key information.

Druid was also designed for reliability and durability. After ingestion, events are processed and organized into columns and segments, which are then persisted to a deep storage layer that also serves as a continuous backup. If a node goes down, its workload is redistributed across the remaining nodes, providing uninterrupted access and guarding against data loss.

Druid also combines the strengths of time-series databases, such as time-based partitioning and fast aggregations, with an extensive suite of analytics operations. Teams can slice their data across multiple dimensions, group it by parameters such as location or sensor type, and filter it on criteria such as IP address. This flexibility makes IoT data easier to work with, enabling analysts and data scientists to answer important questions quickly.
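A query of this kind (filter, bucket by time, group by a dimension, aggregate) might look like the Druid SQL string below. The datasource `sensor_readings` and its columns are hypothetical; `__time` is Druid's primary timestamp column and `TIME_FLOOR` is a Druid SQL function. To show what the query computes without a running cluster, the Python that follows evaluates the same filter, five-minute bucketing, and grouping over an in-memory list of events. It illustrates the query's semantics only, not how Druid executes it.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, Iterable, Tuple

# Illustrative Druid SQL; the datasource and column names are hypothetical.
DRUID_SQL = """
SELECT TIME_FLOOR(__time, 'PT5M') AS bucket, location, AVG(reading) AS avg_reading
FROM sensor_readings
WHERE sensor_type = 'thermo'
GROUP BY 1, 2
"""


def floor_time(ts: datetime, bucket: timedelta) -> datetime:
    """Round ts down to the start of its bucket, aligned to the Unix epoch."""
    epoch = datetime(1970, 1, 1)
    return epoch + ((ts - epoch) // bucket) * bucket


def bucketed_average(
    events: Iterable[dict], bucket: timedelta, sensor_type: str
) -> Dict[Tuple[datetime, str], float]:
    """Filter by sensor_type, then average `reading` per (time bucket, location)."""
    totals = defaultdict(lambda: [0.0, 0])  # (bucket, location) -> [sum, count]
    for e in events:
        if e["sensor_type"] != sensor_type:  # the WHERE clause
            continue
        key = (floor_time(e["time"], bucket), e["location"])  # GROUP BY 1, 2
        totals[key][0] += e["reading"]
        totals[key][1] += 1
    # AVG = sum / count for each group, returned in (time, location) order.
    return {key: s / n for key, (s, n) in sorted(totals.items())}


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 12, 0)
    events = [
        {"time": t0 + timedelta(minutes=1), "location": "plant-a", "sensor_type": "thermo", "reading": 20.0},
        {"time": t0 + timedelta(minutes=6), "location": "plant-a", "sensor_type": "thermo", "reading": 30.0},
        {"time": t0 + timedelta(minutes=2), "location": "plant-b", "sensor_type": "humidity", "reading": 55.0},
    ]
    for (ts, loc), avg in bucketed_average(events, timedelta(minutes=5), "thermo").items():
        print(ts.isoformat(), loc, avg)
```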

Druid also rethinks the relationship between storage and compute for smoother scaling. Its processes are split across master nodes (which manage data availability and ingestion), data nodes (which ingest and store data and process queries over it), and query nodes (which receive client queries, route them to data nodes, and return the merged results), so each node type can be scaled independently as needs change. Druid’s deep storage further improves durability and scalability, ensuring that data remains accessible even as nodes are scaled up or down.

The Cisco ThousandEyes story

One compelling testimonial comes from Cisco ThousandEyes, the cloud intelligence division of the leading digital communications provider. ThousandEyes provides unparalleled visibility into network, application, cloud, and Internet performance for a wide range of Fortune 500 companies, top banks, and innovative companies such as DigitalOcean, Twitter, DocuSign, and eBay.

Much of today’s digital economy relies heavily on networking hardware such as switches, wireless access points, and routers, all of which generate substantial streams of data. This streaming data is ingested, analyzed, queried, and visualized so that users can extract insights, compare current trends against historical performance, and explore their data in an open-ended manner: drilling down, filtering, or isolating by geographic area.

In its early days, ThousandEyes used MongoDB, a transactional database. However, MongoDB could not run in-depth analytics on massive amounts of IoT sensor data effectively or quickly. Using MongoDB to support external analytics led to long wait times (up to 15 minutes) for dashboards and queries, complicating monitoring and troubleshooting for users.

“Druid is basically the best ecosystem for handling large amounts of data,” Lead Software Engineer Gabe Garcia explained. “We have around five to 20 requests per second, and we have sub-second latency for most of our queries (our 98th percentile latency is about a second).”

After moving to Druid, ThousandEyes users found that dashboards were significantly faster. Equally important, they could now better understand network performance across all devices and operating systems, enabling them to find and fix network issues, or even prevent them proactively.