Centralized log management reduces tool sprawl by using the same data but allowing different teams to customize how they use it.

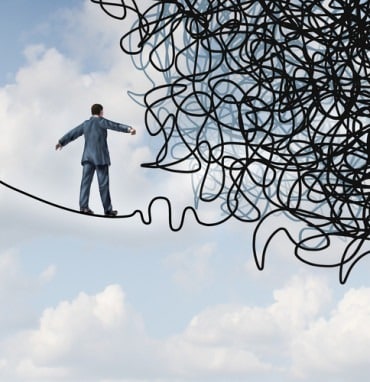

As companies evolve and grow, so does the number of applications, databases, devices, cloud locations, and users. Often, this growth comes from teams adding tools instead of replacing them. As security teams solve individual problems, this tool adoption leads to disorganization, digital chaos, data silos, and information overload. Even worse, it means organizations have no way to correlate data confidently. Reducing log data chaos is critical across the entire organization.

The vicious cycle: more problems, more tools, and yet more problems

With IT infrastructure increasing in complexity, gaining visibility becomes more difficult. In response to these challenges, organizations often turn to best-of-breed solutions. However, this approach comes with a different, possibly more costly, problem: log chaos.

For example, this “add a tool to solve a problem” approach creates disorganization and digital chaos, resulting in:

- Multiple applications in one environment

- Same tools only partially deployed throughout the organization

- Different departments using their own tools

- Data duplication across the organization

- Different configurations and legacy applications

Many organizations keep their operational and security monitoring tools separated because their teams work on different projects with different budgets and different leadership. In the end, this tool sprawl leads to technology and productivity costs by creating inefficiencies and data duplication.

At the end of the day, event logs are event logs. The data stays the same, so purchasing different tools for aggregating and correlating data based on these different use cases leads to tool sprawl.

For every tool, a new data silo appears

All of this chaos creates data silos, which leave security teams struggling to correlate data confidently.

For example, a networking team may be managing multiple firewalls using a network policy management tool. Meanwhile, the security team is trying to monitor traffic with an intrusion detection system. Even though both tools use network event log data, the organization now has a data silo.

Reducing log chaos is even more critical for security teams. If the log data is spread across too many locations or too siloed, the team will be unable to get the alerts they need, let alone correlate events. Without all the important data, abnormal activity indicating a potential security breach may go unnoticed.

Understanding the hidden costs

While tool sprawl costs the organization money, it also comes with three distinct hidden costs: feature duplication, data duplication and integrity, and lost productivity.

As individual teams build out their toolsets, the organization often finds that features overlap between them. This feature duplication costs the organization in both money and workforce time.

- The organization is paying two different vendors for the same capability, ultimately doubling the cost.

- Workforce members are configuring and deploying the same functionality twice, ultimately doubling the amount of time spent setting up the same capability.

Another consequence of feature duplication is data duplication. Multiple tools generating similar reports using their own native formats increase storage costs and make cross-tool data comparisons challenging.
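To illustrate why native formats make cross-tool comparison hard, here is a minimal sketch that maps two made-up export formats onto one shared record shape. Both formats, the sample lines, and all field names (`ts`, `src`, `source_ip`, and so on) are assumptions for illustration and do not come from any real product.

```python
import json
from datetime import datetime, timezone

# Hypothetical export from "tool A": JSON with an epoch-seconds timestamp.
TOOL_A_LINE = '{"ts": 1700000000, "src": "10.0.0.5", "action": "deny"}'
# Hypothetical export from "tool B": pipe-delimited with an ISO 8601 timestamp.
TOOL_B_LINE = "2023-11-14T22:13:20+00:00|10.0.0.5|DENY"


def normalize_tool_a(line: str) -> dict:
    """Convert a tool A JSON line into the shared record shape."""
    raw = json.loads(line)
    return {
        "timestamp": datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
        "source_ip": raw["src"],
        "action": raw["action"].lower(),
    }


def normalize_tool_b(line: str) -> dict:
    """Convert a tool B pipe-delimited line into the shared record shape."""
    ts, src, action = line.split("|")
    return {
        "timestamp": datetime.fromisoformat(ts),
        "source_ip": src,
        "action": action.lower(),
    }


# Once both lines share one shape, they can be compared directly.
same_event = normalize_tool_a(TOOL_A_LINE) == normalize_tool_b(TOOL_B_LINE)
print(same_event)  # True: both lines describe the same denied connection
```

Without a normalization step like this, the two lines look unrelated even though they record the same event, which is how duplicated data goes unnoticed across tools.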

Further, migrating from one tool to another, such as switching anti-virus tools, can affect data integrity. The organization may end up with multiple sets of the same data or lose data during the transition.

Lost productivity

Tool sprawl decreases productivity when employees need to spend time on activities outside their job function. For example, for each tool, employees might spend time responding to support requests, deploying upgrades, working with a vendor, and training personnel.

If an organization has only two overlapping tools, it might not notice the lost productivity. However, as the organization scales, the number of tools using the same log data increases. Eventually, tool sprawl drives up the total cost of ownership (TCO), and maintaining shelfware and redundant tools is the most wasteful cost of all.

Sometimes the best thing to do is clean out the technology tool shed. This means reviewing each tool to determine if it provides unique insight or if it’s still in use because “this is how we’ve always done things.”

One location, multiple use cases

With IT infrastructures increasing in complexity, gaining visibility into the data becomes more difficult.

Centralized log management is a key piece of robust operations and security monitoring strategies because organizations need the context that comes from correlating log data. It enables an organization to reduce costs by bringing all workflows into a single location. With all data in a central location, enterprises can get a granular overview of their current situation and keep only what they need. Centralized log management reduces tool sprawl by using the same data but allowing different teams to customize how they use it. This ultimately reduces the costs of the individual tools and the hidden costs by creating more efficient processes.
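As a rough sketch of "same data, different uses," the example below keeps one list of already-centralized events and derives a separate view for each team. The event fields, firewall names, and both view functions are hypothetical and only meant to show the idea, not how any particular log management product works.

```python
from collections import Counter

# Hypothetical, already-centralized events; all field names are illustrative.
EVENTS = [
    {"source": "firewall-1", "source_ip": "10.0.0.5", "action": "deny", "bytes": 0},
    {"source": "firewall-1", "source_ip": "10.0.0.7", "action": "allow", "bytes": 5120},
    {"source": "firewall-2", "source_ip": "10.0.0.5", "action": "deny", "bytes": 0},
    {"source": "firewall-2", "source_ip": "10.0.0.9", "action": "allow", "bytes": 2048},
]


def operations_view(events):
    """Network operations view: bytes allowed through each firewall."""
    totals = Counter()
    for event in events:
        if event["action"] == "allow":
            totals[event["source"]] += event["bytes"]
    return dict(totals)


def security_view(events):
    """Security view: number of denied connections per source IP."""
    denied = Counter(e["source_ip"] for e in events if e["action"] == "deny")
    return dict(denied)


print(operations_view(EVENTS))  # {'firewall-1': 5120, 'firewall-2': 2048}
print(security_view(EVENTS))    # {'10.0.0.5': 2}
```

Both teams read from the same event list, so neither needs its own copy of the data or a separate tool to collect it.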