Scaling microservices with Kubernetes is a powerful approach to meet the growing demands of modern applications.

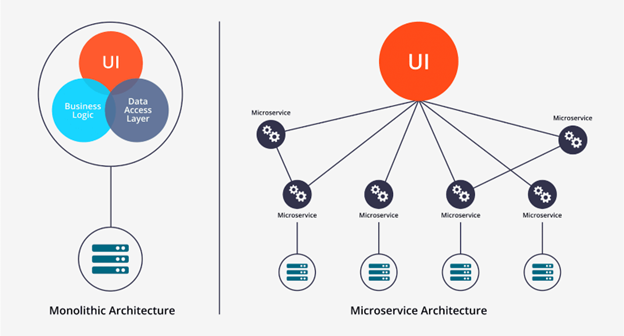

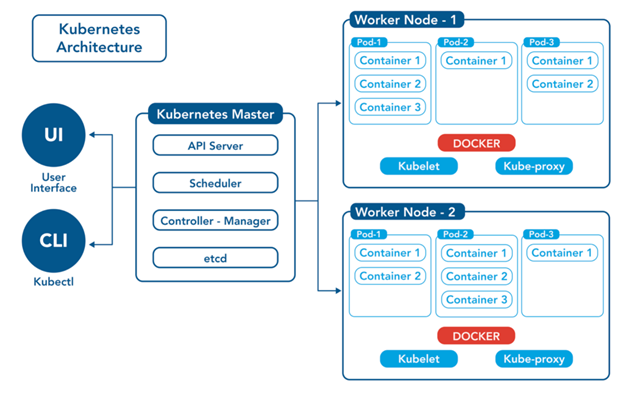

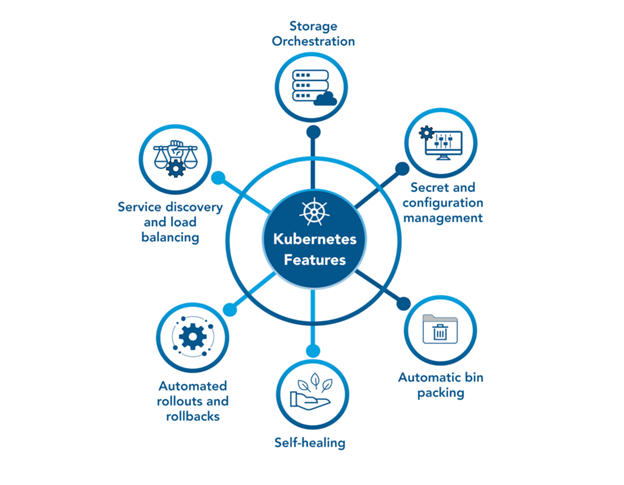

Kubernetes is an open-source container orchestration platform. It automates the deployment, management, and scaling of applications in distributed environments, and it has an ecosystem of tools and integrations that aid in microservices deployment. A microservices architecture structures an application as a collection of small, loosely coupled services that can be deployed and scaled individually. Each microservice focuses on a specific business capability.

In this article, we will dive deeper into how Kubernetes enhances microservices and how to integrate them effectively.

How to Scale Microservices Correctly

Scaling microservices is crucial for ensuring optimal application performance and eliminating bottlenecks. There are several ways to scale microservices, which we cover later in the article.

Here are a few points that ensure that microservices are being scaled effectively:

- The software architecture and deployment strategies should be compatible with the environment.

- The architecture of the application stack should allow for up-scaling and down-scaling.

- After scaling, the whole set of services should still work as a single unit.

- The Four Golden Signals help monitor resource usage and metrics. The signals are:

  - Latency: The time taken to respond to a request. Monitoring latency helps identify performance bottlenecks and guides response-time optimization.

  - Traffic: The number of requests received in a given time. This signal helps us understand the load on the services and make informed scaling decisions.

  - Errors: The rate of failing requests. Tracking it helps identify issues so that you can take the necessary actions to improve the reliability of services.

  - Saturation: How fully the services' resources are being utilized. Monitoring saturation helps us determine whether our services are running at capacity or need additional resources.
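As a rough illustration of the four signals, the sketch below summarizes one observation window of request records. The `Request` record, the field names, and the use of CPU as the saturation measure are assumptions made for this example, not part of any monitoring tool's API.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    duration_ms: float  # time taken to respond to the request
    ok: bool            # False if the request failed


def golden_signals(requests: List[Request], window_s: float,
                   cpu_used: float, cpu_capacity: float) -> dict:
    """Summarize one observation window into the four golden signals."""
    count = len(requests)
    durations = sorted(r.duration_ms for r in requests)
    failed = sum(1 for r in requests if not r.ok)
    # Nearest-rank 95th percentile of the response times.
    p95 = durations[max(0, math.ceil(0.95 * count) - 1)] if count else 0.0
    return {
        "latency_p95_ms": p95,
        "traffic_rps": count / window_s,
        "error_rate": failed / count if count else 0.0,
        "saturation": cpu_used / cpu_capacity,  # assumed: CPU as the capacity measure
    }
```

In practice these values would come from a metrics system rather than in-memory lists, but the definitions stay the same.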

Best Practices for Scaling Microservices with Kubernetes

To maximize the potential of scaling microservices using Kubernetes, follow reliable methods. Here are the five main points to take note of.

1. Creating Scalable Service Designs

Microservices should be designed with scalability in mind from the start. Automated scaling and appropriately sized virtual machines help the system absorb future growth.

The following points can make sure that the designed microservices are scalable:

Statelessness

A stateless service does not keep application state in its own memory or on its local disk; any state it needs lives in an external store such as a database. This ensures that no data is lost when Kubernetes scales the service up or down.
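A minimal sketch of the idea follows. The `ExternalStore` class is a hypothetical in-memory stand-in for a shared database or cache, and `CartService` is an invented example service; the point is only that two replicas return consistent results because neither keeps data of its own.

```python
from typing import Dict


class ExternalStore:
    """Hypothetical stand-in for a shared store such as a database or cache."""

    def __init__(self) -> None:
        self._data: Dict[str, int] = {}

    def increment(self, key: str) -> int:
        self._data[key] = self._data.get(key, 0) + 1
        return self._data[key]


class CartService:
    """A replica holds no cart data itself; every call goes to the store."""

    def __init__(self, store: ExternalStore) -> None:
        self.store = store

    def add_item(self, user_id: str) -> int:
        return self.store.increment(f"cart:{user_id}")


store = ExternalStore()
replica_a = CartService(store)
replica_b = CartService(store)  # a second replica added by scaling

replica_a.add_item("alice")
count = replica_b.add_item("alice")  # served by a different replica
print(count)  # 2
```

Because the count lives in the store, removing either replica loses nothing.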

Loose Coupling

The risk of cascading failures reduces when services have minimal dependencies on each other. Loose coupling also makes it easier to scale individual services.

API Versioning

Version your APIs whenever you release updates. Versioning lets old and new versions of a service run side by side, so an update does not break existing clients, and it makes for a smoother scaling process.

Service Discovery

Service discovery lets services locate each other at runtime instead of relying on hard-coded addresses. This helps prevent failures due to incorrect or outdated configuration settings.
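Inside a cluster, Kubernetes exposes each Service under a predictable DNS name of the form `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain is `cluster.local` by default. The helper below is a small sketch that builds such a name; the function names, the `orders` service, and the `shop` namespace are hypothetical.

```python
def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Build the in-cluster DNS name of a Kubernetes Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def service_url(service: str, namespace: str, port: int) -> str:
    """Build a plain-HTTP URL for calling another service by name."""
    return f"http://{service_dns_name(service, namespace)}:{port}"


print(service_url("orders", "shop", 8080))
# http://orders.shop.svc.cluster.local:8080
```

Calling a service by name rather than by IP address means the caller's configuration does not change when pods are rescheduled or replicas are added.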

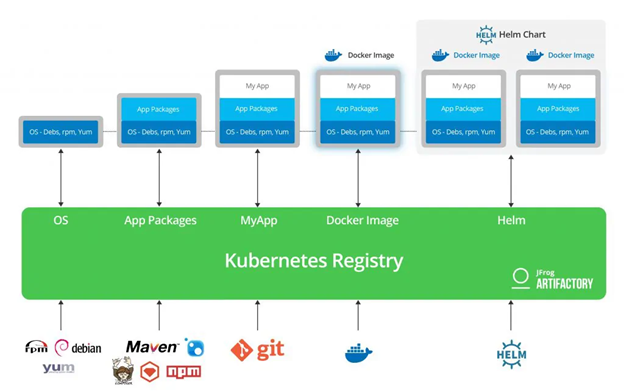

2. Utilizing the Container Registry Effectively

Kubernetes pulls container images from a container registry, where users store them. Kubernetes itself does not ship a built-in registry, so you choose a hosted or self-managed one. Using the registry efficiently ensures consistent deployments across the infrastructure, which makes scaling the services easier.

Some best practices for utilizing the registry are:

Use a Private Registry

A private registry restricts who can push and pull your container images, which makes them more secure.

Optimize Image Sizes

The container images should be small and light. Remove unnecessary files and dependencies from the images to reduce the time taken to download and deploy your services.

Implement Image Security Scanning

It is vital to make sure that your services remain secure while scaling. The container images should be scanned for irregularities and vulnerabilities regularly.

Monitor and Automate

When a container image changes, the update should roll out automatically to every deployed service that uses it. A consistent application state is essential for the uninterrupted running of microservices.

3. Efficient Resource Monitoring and Management

A stable Kubernetes environment relies on effective resource management. Here are the best practices to keep in mind:

Resource Quotas

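Each microservice should have a defined ceiling on the resources it can use at a given time. In Kubernetes, this is typically expressed through per-container resource requests and limits, with ResourceQuota objects capping total usage per namespace. Without such limits, one microservice that overconsumes resources can cause others to stall.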
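To make the arithmetic concrete, here is a small sketch that checks whether a set of CPU requests fits under a quota. It assumes Kubernetes-style CPU quantities, where `"500m"` means 500 millicores (half a CPU) and `"2"` means two CPUs; the function names and the service names in the example are hypothetical.

```python
from typing import Dict


def parse_cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "500m", "2", or "0.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(round(float(quantity) * 1000))


def fits_quota(cpu_requests: Dict[str, str], quota_cpu: str) -> bool:
    """Return True if the summed CPU requests stay within the quota."""
    total = sum(parse_cpu_millicores(q) for q in cpu_requests.values())
    return total <= parse_cpu_millicores(quota_cpu)


# 500m + 1000m = 1500m, which fits under a 2-CPU quota.
print(fits_quota({"orders": "500m", "payments": "1"}, "2"))   # True
# 1500m + 1000m = 2500m, which exceeds it.
print(fits_quota({"orders": "1500m", "payments": "1"}, "2"))  # False
```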

Autoscaling

The Horizontal Pod Autoscaler (HPA) automatically adjusts the number of pods based on observed resource usage or on custom metrics.

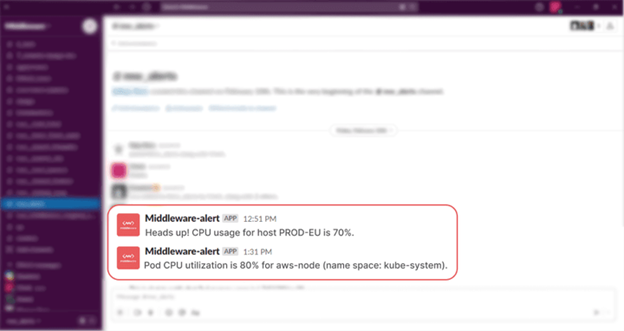
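The core calculation behind the Horizontal Pod Autoscaler is documented as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that formula with a tolerance band and replica bounds. The function name and the default bounds are illustrative, and the real controller also handles details such as missing metrics and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified version of the HPA replica calculation."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas at 90% average CPU against a 60% target: ceil(4 * 1.5) = 6.
print(desired_replicas(4, 90, 60))  # 6
```

Because the result is rounded up, the autoscaler errs on the side of having slightly too much capacity rather than too little.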

Monitor and Optimize

Track service performance periodically to identify bottlenecks and opportunities for optimization. Metrics worth recording include resource usage and response times. Consider using an incident alerting system that escalates critical alerts when a metric crosses its threshold.

4. Establishing a Reliable CI/CD Pipeline

As mentioned above, regular monitoring of the service is important for seamless functioning. The best way to stay on top of these services is by implementing a Continuous Integration/Continuous Deployment pipeline.

The following practices ensure the best performance out of the CI/CD system:

Automate Testing

Automated tests catch issues far sooner than manual checks. Include them at every stage of the pipeline so that your services stay reliable and scalable with each change.

Use Canary Deployments

In a canary deployment, a new version is first rolled out to a small group of users, much like beta testing. Monitoring this group helps identify potential issues before the version reaches everyone.
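One common way to pick the "small group of users" is to hash a stable identifier into a bucket, so the same user always sees the same version. The sketch below shows that idea only; the function name is hypothetical, and how traffic is actually split in a cluster depends on your ingress controller or service mesh.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically assign a user to the canary group.

    The user ID is hashed into one of 100 buckets; users whose bucket
    falls below canary_percent are served by the new version.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < canary_percent


# Roughly 10% of users land in the canary group.
users = [f"user-{i}" for i in range(10_000)]
share = sum(routes_to_canary(u, 10) for u in users) / len(users)
print(f"canary share: {share:.1%}")
```

Raising `canary_percent` step by step widens the rollout without moving users who were already on the new version back to the old one.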

Rollback Strategies

Sometimes a few issues do slip through despite monitoring and taking precautions. A rollback strategy to revert the services to a previous stable version may come in handy in these cases.

Version Control

To make rollback possible, keep every deployment under version control. A version control system keeps track of your code and changes over time.
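The link between version tracking and rollback can be sketched as a simple revision history. The `DeploymentHistory` class and the image tags below are hypothetical and only model the bookkeeping; they do not call any Kubernetes API.

```python
from typing import List


class DeploymentHistory:
    """Tracks deployed versions so the latest one can be reverted."""

    def __init__(self) -> None:
        self._revisions: List[str] = []

    def deploy(self, image_tag: str) -> None:
        self._revisions.append(image_tag)

    @property
    def current(self) -> str:
        return self._revisions[-1]

    def rollback(self) -> str:
        """Drop the latest revision and return the one now in effect."""
        if len(self._revisions) < 2:
            raise RuntimeError("no earlier revision to roll back to")
        self._revisions.pop()
        return self.current


history = DeploymentHistory()
history.deploy("v1.0.0")
history.deploy("v1.1.0")      # suppose this release misbehaves
print(history.rollback())     # v1.0.0
```

Without a recorded earlier revision there is nothing to return to, which is why the sketch raises an error in that case.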

Monitor Your Services

Monitoring tools such as Datadog can send alerts when issues arise. These alerts aid in quick diagnosis and resolution of the problem.

5. Ensuring High Availability and Disaster Recovery

High availability and disaster recovery are two key components that aid microservices’ functionality. The following practices can help incorporate these two components into your workflow:

Multiple Availability Zones

Availability zones are isolated locations within a cloud region where your services can be deployed. Deploying across several zones reduces the risk of downtime: because other zones provide the same services, no single zone becomes a single point of failure.

Replicas

Running multiple replicas of a service keeps it available even when one replica fails.

Backup and Restore

There should always be a backup and restore strategy for all services. This may come in handy in the event of a disaster or a cascading failure.

Automated Failover

Automated failover of services happens when a backup instance takes over after the primary instance fails.
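The selection step of a failover can be sketched as choosing the first healthy instance in priority order. The function and instance names are hypothetical, and the health map stands in for whatever health checks your platform actually runs.

```python
from typing import Dict, List, Optional


def pick_active(instances: List[str], healthy: Dict[str, bool]) -> Optional[str]:
    """Return the first healthy instance in priority order, or None."""
    for name in instances:
        if healthy.get(name, False):
            return name
    return None


priority = ["primary", "backup"]
print(pick_active(priority, {"primary": True, "backup": True}))   # primary
print(pick_active(priority, {"primary": False, "backup": True}))  # backup
```

An instance with no recorded health status is treated as unhealthy, so traffic is never sent to an instance that has not been checked.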

Testing

Testing of the architecture and processes is vital. The tests ensure that the infrastructure can withstand failure and recover quickly.

Scaling Your Microservices Using Kubernetes

Scaling of microservices can be done in many ways to serve different purposes. Using Kubernetes, they can be scaled to have a better output performance. Here are some of the common techniques for scaling your microservices using Kubernetes.

Vertically Scaling the Entire Cluster

In vertical scaling, the size of the virtual machines that serve as the cluster's nodes is increased, thereby scaling up the entire cluster. Instead of adding more virtual machines, each one is made ‘vertically’ larger, which gives it more computing capacity.

Horizontally Scaling the Entire Cluster

In this scaling method, additional virtual machines are added to the cluster. This increases the computing power and spreads the load of the application.

Horizontally Scaling Individual Microservices

When a microservice becomes overloaded with incoming requests, you can horizontally scale it. This distributes its load over multiple instances. This is achieved by replicating that particular microservice multiple times. The requests are then handled by multiple copies of the microservice.

Elastically Scaling the Entire Cluster

In this method, Kubernetes can automatically identify bottlenecks and rectify them, usually by allocating more resources to those services. At the same time, it can deallocate resources that are no longer needed, ensuring efficient resource management and streamlined functioning of the services.
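A back-of-the-envelope version of this decision is to compare the CPU that pods request with what each node can offer. The sketch below is a simplified lower bound under stated assumptions: it considers CPU only, assumes identical nodes, and ignores how pods actually pack onto nodes. The function names are hypothetical.

```python
import math
from typing import List


def nodes_needed(pod_cpu_requests_m: List[int], node_cpu_capacity_m: int,
                 min_nodes: int = 1) -> int:
    """Lower bound on the node count for the given CPU requests (millicores)."""
    total = sum(pod_cpu_requests_m)
    return max(min_nodes, math.ceil(total / node_cpu_capacity_m))


def scaling_delta(current_nodes: int, pod_cpu_requests_m: List[int],
                  node_cpu_capacity_m: int) -> int:
    """Positive means add nodes, negative means nodes can be removed."""
    return nodes_needed(pod_cpu_requests_m, node_cpu_capacity_m) - current_nodes


# Ten pods requesting 500m each on 2000m nodes: ceil(5000 / 2000) = 3 nodes.
print(scaling_delta(2, [500] * 10, 2000))  # 1
# Three such pods fit on one node, so two of three nodes could be released.
print(scaling_delta(3, [500] * 3, 2000))   # -2
```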

Elastically Scaling Individual Microservices

This scaling feature of Kubernetes dynamically adjusts resources based on demand. To enable elastic scaling, you need to define the scaling metrics or policies for each microservice. Kubernetes offers two primary approaches:

Horizontal Pod Autoscaler (HPA): It automatically scales the number of replicas of a microservice based on CPU utilization or memory usage.

Vertical Pod Autoscaler (VPA): It focuses on adjusting the resource allocation for individual microservice pods based on their usage patterns.

Summary

Scaling microservices with Kubernetes is a powerful approach to meet the growing demands of modern applications. Scaling often requires risky configuration changes to the cluster, so it is best to try them out on a new cluster or use blue-green deployment to protect users.

You can build a scalable and resilient infrastructure by following best practices such as creating scalable service designs, using a container registry effectively, monitoring and managing resources, establishing a reliable CI/CD pipeline, and ensuring high availability.

Kubernetes provides you with effective microservice scaling methods, which help deliver a seamless, high-performing user experience.

Jinal Lad Mehta also contributed to this article.

Mehta is a digital marketer at Middleware, an AI-powered cloud observability tool. She is known for writing creative and engaging content. She loves to help entrepreneurs get their message out into the world. You can find her looking for ways to connect people, ideas, and products.