
By definition, artificial intelligence refers to computer systems that can learn, reason, and act for themselves. But where does this intelligence come from? For decades, the collaborative intelligence of humans and machines has produced some of the world's leading technologies. And while there's nothing glamorous about the data used to train today's AI applications, the role of data annotation in AI is nonetheless fascinating.


Poorly Labeled Data Leads to Compromised AI

Imagine reviewing hours of video footage, sorting through thousands of driving scenes to label every vehicle that comes into frame. That is data annotation: the process of labeling images, video, audio, and other data sources so that computer systems built for supervised learning can recognize and learn from them. These labels are the intelligence behind AI algorithms.
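To make the definition concrete, here is a minimal sketch of what the labels for a single frame of driving footage might look like, and how they become (input, target) pairs for supervised learning. The schema (`LabeledFrame`, `Annotation`, `BoundingBox`) and the corner-coordinate box format are hypothetical; real annotation tools and datasets each define their own formats.

```python
from dataclasses import dataclass, field

# Hypothetical schema for one annotated video frame; real tools use their own formats.


@dataclass
class BoundingBox:
    # Pixel coordinates of the top-left (x_min, y_min) and bottom-right (x_max, y_max) corners.
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def is_valid(self) -> bool:
        # A usable box must have positive width and height.
        return self.x_max > self.x_min and self.y_max > self.y_min


@dataclass
class Annotation:
    label: str          # object class, e.g. "car" or "truck"
    box: BoundingBox


@dataclass
class LabeledFrame:
    frame_id: str       # stands in for the frame's pixel data in this sketch
    annotations: list[Annotation] = field(default_factory=list)


# One supervised example: an input reference paired with its (label, box) targets.
Example = tuple[str, list[tuple[str, tuple[float, float, float, float]]]]


def to_supervised_examples(frames: list[LabeledFrame]) -> list[Example]:
    """Pair each frame with its (label, box) targets, skipping malformed boxes."""
    examples = []
    for frame in frames:
        targets = [
            (a.label, (a.box.x_min, a.box.y_min, a.box.x_max, a.box.y_max))
            for a in frame.annotations
            if a.box.is_valid()
        ]
        examples.append((frame.frame_id, targets))
    return examples


if __name__ == "__main__":
    frame = LabeledFrame("scene_0001/frame_042", [
        Annotation("car", BoundingBox(100, 200, 180, 260)),
        Annotation("truck", BoundingBox(300, 150, 300, 240)),  # zero width: a labeling error
    ])
    print(to_supervised_examples([frame]))
    # [('scene_0001/frame_042', [('car', (100, 200, 180, 260))])]
```

In a real pipeline the frame ID would be resolved to pixel data before training; the sketch keeps only the ID so it stays self-contained. Note how a single malformed label silently removes a vehicle from the training targets, which is one way poor labeling turns into a weaker model.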

For companies using AI to solve real-world problems, improve operations, increase efficiency, or otherwise gain a competitive edge, training an algorithm takes more than collecting annotated data. It means sourcing high-quality training data and ensuring that data supports model validation, so applications can be brought to market quickly, safely, and ethically.

Data is the most crucial element of machine learning. Without data annotation, computers couldn't be trained to see, speak, or perform intelligent functions. Yet obtaining datasets and labeling training data are among the top limitations to AI adoption, according to the McKinsey Global Institute. Another known limitation is data bias, which can creep in at any stage of the training data lifecycle but more often than not stems from poor-quality or inconsistent data labeling.

IDC reports that 50 percent of the IT and data professionals it surveyed cite data quality as a challenge in deploying AI workloads. But where does quality data come from?

Open-source datasets are one way to collect data for an ML model, but because many are curated for a specific use case, they may not be useful for highly specialized needs. Also, the amount of data needed to train your algorithm varies with the complexity of the problem you're trying to solve and the complexity of your model.

When it was released, the Waymo Open Dataset was described as the largest, most diverse autonomous driving dataset to date, consisting of thousands of images labeled with millions of bounding boxes and object classes: 12 million 3D bounding box labels and 1.2 million 2D bounding box labels, to be exact. Even so, Waymo plans to keep growing the dataset.

Why? Because current, accurate, and refreshed data is necessary to continuously train, validate, and maintain agile machine learning models. There are always edge cases, and for some use cases, even more data is needed. If the data is lacking in any way, those gaps compromise the intelligence of the algorithm in the form of bias, false positives, poor performance, and other issues.

Let’s say you’re searching for a new laptop. When you type your specifications into the search bar, the results that come up are the work of millions of labeled and indexed data points, from product SKUs to product photos.

If your search returns results for a lunchbox, a briefcase, or anything else mistaken for the signature clamshell of a laptop, you’ve got a problem. You can’t find it, so you can’t buy it, and that company just lost a sale.

This is why quality annotated data is so important. Poor-quality data is directly correlated with biased and inaccurate models, and in some cases, improving data quality is as simple as making sure you have the right data in the first place.

Vulcan Inc. experienced the challenge of dataset diversity firsthand while developing AI-enabled products to record and monitor African wildlife. While trying to detect cows in imagery, the team realized that a model trained only on a dataset of cows from Washington could not recognize cows in Africa. To get their ML model operating at peak performance, they needed to create a training dataset of their own.

Labeling Data Is Demanding for AI Teams

As you might expect, data annotation takes time. And for in-house teams, labeling data can be the proverbial bottleneck, limiting your ability to quickly train and validate machine learning models.

Labeling datasets is arguably one of the hardest parts of building AI. Cognilytica reports that 80 percent of AI project time is spent aggregating, cleaning, labeling, and augmenting data to be used in machine learning models. That’s before any model development or AI training even begins.

And while labeling data is neither an engineering challenge nor a data science problem, data annotation can prove demanding for several reasons.

The first is the sheer amount of time it takes to prepare large volumes of raw data for labeling. It's no secret that creating datasets requires human effort, and separating the desired data from irrelevant data is a task in and of itself.

Then there's the challenge of labeling the clean data efficiently and accurately. A short video can take several hours to annotate, depending on which object classes appear and how densely they must be labeled for the model to learn effectively.

An in-house team may not have enough dedicated personnel to process the data in a timely manner, leaving model development at a standstill until this task is complete. In some cases, the added pressure of keeping the AI pipeline moving can lead to incomplete or partially labeled data, or worse, blatant errors in the annotations.

Even in instances where existing personnel can serve as the in-house data annotation team, and they have the training and expertise to do it well, few companies have the technology infrastructure to support an AI pipeline from ingestion to algorithm, securely and smoothly.

This is why organizations that lack the time for data annotation, annotation expertise, a clear strategy for AI adoption, or the technology infrastructure to support the training data lifecycle partner with trusted providers to build smarter AI.

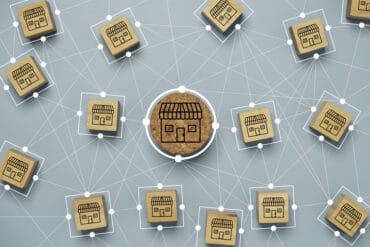

To improve its retail item coverage from 91 to 98 percent, Walmart worked with a specialized data annotation partner to evaluate its data and ensure that data was accurate enough to train Walmart's systems. With more than 2.5 million items cataloged during the partnership, the Walmart team has been able to focus on model development rather than data aggregation.

How Data Annotation Providers Combine Humans and Tech

Data annotation providers have access to tools and techniques that can help expedite the annotation process and improve the accuracy of data labeling.

For starters, working day in and day out with training data means these companies see a range of scenarios where data annotation is seamless and where things could be improved. They can then pass these learnings on to their clients, helping to create effective training data strategies for AI development.

For organizations unsure of how to operationalize AI in their business, an annotation provider can serve as a trusted advisor to the machine learning team, asking the right questions at the right time and under the right circumstances.

A recent report found that organizations spend five dollars on internal data labeling for every dollar they spend on third-party services. This may be due, in part, to the expense of assigning labeling tasks to data scientists and ML engineers. Still, there's also something to be said for the established platforms, workflows, and trained workforce that allow annotation service providers to work more efficiently.

Working with a trusted partner often means that the annotators assigned to your project are trained to understand the context of the data being labeled. It also means you have a dedicated technology platform for data labeling. Over time, your dedicated team of labelers can begin to specialize in your use case, and this expertise can lower costs and improve the scalability of your AI programs.

Technology platforms that incorporate automation and reporting, such as automated QA, can also improve labeling efficiency: they help catch logical errors in annotations, speed up training for data labelers, and provide a consistent measure of annotation quality. This also reduces the manual QA time required of both the client and the annotation provider.
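The article does not say how automated QA measures annotation quality. One common approach for bounding boxes, sketched below under that assumption, compares a labeler's box against a reference box (for example, a reviewer's label or a gold-standard label) using intersection over union (IoU), and flags logical errors such as a box that falls outside the image. The function names and the 0.5 IoU threshold are illustrative assumptions, not any provider's actual rules.

```python
from typing import NamedTuple


class Box(NamedTuple):
    label: str      # object class assigned by the annotator
    x_min: float    # pixel coordinates of the top-left corner...
    y_min: float
    x_max: float    # ...and of the bottom-right corner
    y_max: float


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0.0 = no overlap, 1.0 = identical)."""
    inter_w = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    inter_h = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    intersection = inter_w * inter_h
    area_a = (a.x_max - a.x_min) * (a.y_max - a.y_min)
    area_b = (b.x_max - b.x_min) * (b.y_max - b.y_min)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def qa_check(submitted: Box, reference: Box, image_w: float, image_h: float,
             min_iou: float = 0.5) -> list[str]:
    """Return a list of QA issues; an empty list means the annotation passes."""
    issues = []
    # Logical check: the box must have positive area and lie inside the image.
    inside = (0 <= submitted.x_min < submitted.x_max <= image_w
              and 0 <= submitted.y_min < submitted.y_max <= image_h)
    if not inside:
        issues.append("box outside image or degenerate")
    # Consistency checks against the reference annotation.
    if submitted.label != reference.label:
        issues.append(f"label mismatch: {submitted.label!r} vs {reference.label!r}")
    overlap = iou(submitted, reference)
    if overlap < min_iou:
        issues.append(f"low IoU: {overlap:.2f}")
    return issues


if __name__ == "__main__":
    reference = Box("car", 100, 100, 200, 200)
    print(qa_check(Box("car", 110, 110, 210, 210), reference, 640, 480))
    # []  (IoU is about 0.68, above the threshold)
    print(qa_check(Box("truck", 150, 150, 250, 250), reference, 640, 480))
    # ["label mismatch: 'truck' vs 'car'", 'low IoU: 0.14']
```

Because every annotation is scored by the same rule, the check gives the consistent quality measure the paragraph describes, and reviewers only need to look at the items it flags.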

Few-click annotation is another example: it uses machine learning to increase accuracy and reduce labeling time. With few-click annotation, the time it takes a human to annotate several points can drop from two minutes to a few seconds. This combination of machine learning and a human who makes a few clicks produces a level of labeling precision previously not possible with human effort alone.
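The article does not describe how few-click tools work internally. As a simplified stand-in, the sketch below shows extreme-point clicking: the annotator clicks an object's leftmost, rightmost, topmost, and bottommost points, and the bounding box is derived from those four clicks rather than drawn by hand. The optional `refine` hook is a hypothetical placeholder for the machine learning model that, in a real tool, would adjust the box or predict a full outline; no model is included here.

```python
from typing import Callable, Optional

# (x_min, y_min, x_max, y_max) in pixel coordinates
Box = tuple[float, float, float, float]


def box_from_extreme_clicks(
    clicks: list[tuple[float, float]],
    refine: Optional[Callable[[Box], Box]] = None,
) -> Box:
    """Derive a bounding box from four extreme-point clicks, given in any order.

    `refine` is a hypothetical hook where a model could adjust the box;
    no model is included in this sketch.
    """
    if len(clicks) != 4:
        raise ValueError("expected exactly four extreme-point clicks")
    xs = [x for x, _ in clicks]
    ys = [y for _, y in clicks]
    box = (min(xs), min(ys), max(xs), max(ys))
    return refine(box) if refine is not None else box


def pad_two_pixels(b: Box) -> Box:
    """Stand-in for a refinement model: grows the box by 2 pixels on every side."""
    return (b[0] - 2, b[1] - 2, b[2] + 2, b[3] + 2)


if __name__ == "__main__":
    # Left, right, top, and bottom points of an object, as an annotator might click them.
    clicks = [(120, 210), (310, 205), (200, 150), (215, 280)]
    print(box_from_extreme_clicks(clicks))                         # (120, 150, 310, 280)
    print(box_from_extreme_clicks(clicks, refine=pad_two_pixels))  # (118, 148, 312, 282)
```

Extreme points are easier for a person to hit precisely than box corners, which usually lie outside the object; that is one reason a few clicks can replace slower manual drawing.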

The human in the loop is not going away in the AI supply chain. However, more data annotation providers are also using pre- and post-processing technologies to support the humans training AI. In pre-processing, a script uses machine learning to convert raw data into clean datasets. This does not replace or reduce data labeling, but it can help improve the quality of the annotations and of the labeling process.
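As an illustration of such a pre-processing script, the sketch below drops empty files, images too small to label, and exact duplicates, then passes the remaining items through a relevance check. The `RawItem` type, the 64-pixel minimum, and the `is_relevant` callback (standing in for a machine learning relevance model) are all hypothetical. The cleaned items still go to human labelers; the script only removes material that should not be labeled in the first place.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class RawItem:
    item_id: str
    data: bytes   # raw file contents, e.g. an encoded image
    width: int    # pixel dimensions reported by the capture system
    height: int


def preprocess(items: Iterable[RawItem],
               is_relevant: Callable[[RawItem], bool],
               min_side: int = 64) -> list[RawItem]:
    """Filter raw items down to a clean, de-duplicated set that is ready for labeling."""
    seen_hashes: set[str] = set()
    clean: list[RawItem] = []
    for item in items:
        if not item.data:                            # empty or failed capture
            continue
        if min(item.width, item.height) < min_side:  # too small to annotate reliably
            continue
        digest = hashlib.sha256(item.data).hexdigest()
        if digest in seen_hashes:                    # exact duplicate of an earlier item
            continue
        seen_hashes.add(digest)
        if not is_relevant(item):                    # placeholder for an ML relevance model
            continue
        clean.append(item)
    return clean


if __name__ == "__main__":
    raw = [
        RawItem("a", b"frame-a", 640, 480),
        RawItem("b", b"frame-a", 640, 480),   # duplicate of "a"
        RawItem("c", b"", 640, 480),          # empty
        RawItem("d", b"frame-d", 32, 32),     # too small
        RawItem("e", b"frame-e", 1280, 720),
    ]
    kept = preprocess(raw, is_relevant=lambda item: True)
    print([item.item_id for item in kept])    # ['a', 'e']
```

The cheap checks run first so that the (presumably more expensive) relevance model only sees items that could actually be labeled.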

There are no shortcuts to training AI, but a data annotation provider can help expedite the labeling process by leveraging its in-house technology platforms and acting as an extension of your team, closing the loop between data scientists and data labelers.