The combination of HCI and edge computing will give AI the tools to evolve to the next level, enabling smarter and faster decision making for organizations.

In 2019, artificial intelligence (AI), machine learning (ML), and advanced analytics had a significant impact on the way IT operates. While AI offers seemingly endless possibilities to advance IT and our daily lives, we are beginning to see the limitations of technologies that were not built to incorporate AI into their processes.

What can be done to ensure that today’s businesses and IT professionals can enjoy AI’s full potential? Enter hyperconverged infrastructure (HCI), which has rapidly gained recognition for its long list of benefits, such as simplicity, high availability, and scalability. HCI is known for requiring simpler management, using less rack space and power, involving fewer vendors overall, and offering an easy transition to commodity servers.

Likewise, edge computing is gaining tremendous momentum across IT and business functions because it processes and stores data at the edge of the network, where the data is actually created. This, in turn, enables greater performance, easier management, heightened security, and a dramatic reduction in associated costs.

The combination of edge computing and HCI is the ideal foundation for overcoming legacy technology limitations and bringing AI to the next level.

Understanding Edge Computing

Today’s world is increasingly data-driven, and that data is being created outside of the traditional data center. Edge computing is the processing of data at the edge of a network, on-site where it is created. With only a small hardware footprint, infrastructure at the edge collects, processes, and reduces vast quantities of data, which can then be moved to a centralized data center or the cloud. The benefit is that data is processed and acted upon at the point of creation, instead of being sent across long routes before it can be used. Edge computing can be key in numerous use cases, from self-driving cars, grocery stores, and quick-service restaurants to industrial settings such as energy plants and mines.
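To make the "collect, process, and reduce" idea concrete, here is a minimal Python sketch of an edge node that condenses a window of raw sensor readings into a small summary before anything is sent upstream. The function names, the temperature readings, and the alert limit are all illustrative assumptions, not part of any particular edge platform.

```python
from statistics import mean


def summarize_window(readings):
    """Reduce a window of raw sensor readings to a compact summary.

    Hypothetical helper: only this summary, not the raw readings,
    would be forwarded to the data center or cloud.
    """
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


def needs_local_action(summary, limit):
    """Decide on-site, without a round trip, whether to react."""
    return summary["max"] > limit


# Assumed example data: four temperature readings from one device.
window = [70.0, 71.0, 72.0, 95.0]
summary = summarize_window(window)
alert = needs_local_action(summary, limit=90.0)
```

In this sketch the decision to react is made locally from the summary, and the payload that travels upstream is four numbers regardless of how many raw readings the window held.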

However, the data being captured at the edge is not yet being used as effectively as it could be. AI, while out of its infancy, is still a rather young technology, and training its models requires an extraordinary amount of resources. For training purposes, edge computing is best suited to letting information and telemetry flow into the cloud for deep analysis; models trained in the cloud should then be deployed back to the edge. The best resources for model creation will always be in the cloud or data center.
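The train-in-the-cloud, deploy-to-the-edge flow described above can be sketched in a few lines of Python. This is a deliberately tiny stand-in, not a real training pipeline: the "model" is a single threshold, the artifact is a JSON string, and the names `train_in_cloud` and `EdgeModel` are hypothetical.

```python
import json


def train_in_cloud(samples):
    """Fit a trivial model where compute is plentiful.

    The 'model' is the midpoint between the mean of normal (label 0)
    and faulty (label 1) readings, serialized as a small JSON artifact.
    """
    normal = [x for x, label in samples if label == 0]
    faulty = [x for x, label in samples if label == 1]
    threshold = (sum(normal) / len(normal) + sum(faulty) / len(faulty)) / 2
    return json.dumps({"threshold": threshold})


class EdgeModel:
    """Loads the artifact shipped from the cloud and runs inference locally."""

    def __init__(self, artifact):
        self.threshold = json.loads(artifact)["threshold"]

    def predict(self, x):
        return 1 if x > self.threshold else 0


# Assumed telemetry that flowed from edge devices into the cloud.
telemetry = [(1.0, 0), (2.0, 0), (8.0, 1), (9.0, 1)]
artifact = train_in_cloud(telemetry)  # heavy step, done centrally
model = EdgeModel(artifact)           # light step, done at the edge
```

The point of the split is that the expensive step runs once, centrally, while the edge device only needs enough resources to load a small artifact and evaluate it.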

A great example is Cerebras, a next-generation silicon chip company that just launched its “Wafer Scale Engine,” which is engineered specifically for the training of AI models. The new chip packs 1.2 trillion transistors and 400,000 processing cores. However, all of this consumes an amount of power measured in tens of kilowatts, making it unviable for the majority of edge deployments.

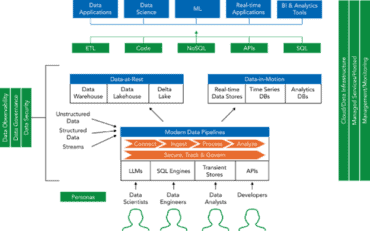

Consolidating edge computing workloads using HCI allows organizations to create, and optimize their use of, what are known as data lakes. Once data is in a data lake, it is available to all applications for analysis, and machine learning can offer new insights by drawing on shared data from various devices and applications.

The ease of use that HCI enables by uniting servers, storage, and networking in one box eliminates many of the configuration and networking difficulties that can accompany edge computing. Moreover, platforms that deliver integrated management for hundreds or thousands of edge devices in different geographical locations, all with different types of networks and interfaces, avoid much of this complexity and significantly reduce operational expenses.

The Advantages of HCI and Edge Computing for AI

With the continued introduction of consumer advancements such as smart home devices, wearable technology, and self-driving cars, AI is growing up and becoming more common in everyday life, no longer relegated to science fiction. It is therefore no surprise that AI is set for significant growth, with an estimated 80% of devices expected to have some sort of AI feature by 2020.

Historically, AI technology has relied on data stored in the cloud. However, this can cause latency, because that data has to travel to data centers and then back to the smart connected device (that is, an Internet of Things, or IoT, device). This can be problematic across many use cases, such as self-driving cars, which cannot wait for a round trip of data to know when to brake or how fast to travel.

The benefit of edge computing for AI is that the required data lives local to the device, thereby reducing latency. Having the data reside at the edge of the device’s network also allows new data to be stored, accessed, and then uploaded to the cloud when required. This greatly benefits AI devices such as smartphones and self-driving cars, which don’t always have access to the cloud because of limited network availability or bandwidth but still rely on data processing to make decisions.
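One common way to get this "store locally, upload when possible" behavior is a store-and-forward buffer. The Python sketch below is an illustration under stated assumptions: the class name, the `upload` callback, and the `online` flag are hypothetical, and a real device would persist the queue to disk rather than hold it in memory.

```python
from collections import deque


class StoreAndForward:
    """Keeps new data at the edge and uploads it when the cloud is reachable."""

    def __init__(self, upload, max_items=1000):
        self._upload = upload  # assumed callable that sends one item upstream
        # A bounded deque drops the oldest item once max_items is reached.
        self._pending = deque(maxlen=max_items)

    def record(self, item):
        """Store an item locally; this never waits on the network."""
        self._pending.append(item)

    def flush(self, online):
        """Upload everything pending if connected; return how many were sent."""
        if not online:
            return 0
        sent = 0
        while self._pending:
            self._upload(self._pending[0])  # send first, then remove,
            self._pending.popleft()         # so a failed upload keeps the item
            sent += 1
        return sent

    def __len__(self):
        return len(self._pending)


# Usage: a list stands in for the cloud endpoint.
cloud = []
buffer = StoreAndForward(cloud.append)
buffer.record({"speed_kmh": 42})
buffer.record({"speed_kmh": 40})
sent_offline = buffer.flush(online=False)  # no connectivity: nothing leaves
sent_online = buffer.flush(online=True)    # connectivity back: queue drains
```

The device keeps making decisions from local data the whole time; connectivity only affects when the cloud receives its copy.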

Another benefit that the combination of HCI and edge computing brings to AI is a reduced form factor. HCI allows technology to operate within a smaller hardware design. In fact, some organizations are set to introduce highly available HCI edge compute clusters that are no bigger than a cup of coffee.

HCI must embrace and include edge computing, because doing so provides benefits important to the advancement of AI and allows the technology to operate without a great deal of human involvement. This, in turn, lets AI get the most out of its machine learning capabilities and make smart decisions more efficiently.

While the cloud has provided AI the platform it needed to grow to the level of being available on nearly every technological device, the combination of HCI and edge computing will give AI the tools needed to evolve to the next level, with smarter and faster decision making for organizations.