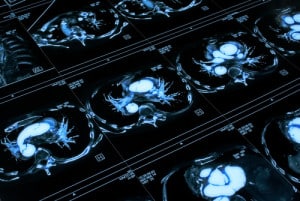

Will computers “see” better than radiologists?

Deep learning has already found a valuable place in autonomous vehicles. According to a new report from Signify Research, it could also create a $300 million medical imaging market by 2021.

Signify Research bills itself as an “independent supplier of market intelligence and consultancy to the global healthcare technology industry.”

While deep learning is predicted to grow rapidly, investment in other image analysis techniques will likely stay flat over the next few years. In 2016, total spending on medical image analysis solutions was less than $300 million, and that figure is slated to more than double by 2021. In other words, by 2021 deep learning alone will attract more investment in medical imaging than the entire image analysis industry spent in 2016. Why are hospitals turning to deep learning for a boost?

The issue: specialists are overworked

In many countries, there simply aren’t enough radiologists to examine, and make sound decisions about, all the images they receive throughout the day. According to Radiology Business and an analysis conducted by doctors at the Mayo Clinic, medical imaging saw “explosive growth” in the mid-2000s. Between 1999 and 2010, the number of images per CT exam increased from 82 to 679, and the number per MRI exam jumped from 164 to 570.

Simply put, radiologists are overworked, and that can lead to medical errors. The Mayo Clinic report authors wrote: “As the workload continues to increase, there is concern that the quality of the health care delivered by the radiologist will decline in the form of increased detection errors as a result of increased fatigue and stress.”

Radiologists and other specialists must review a large number of images every day to make their diagnoses. Any assistance with that effort should not only increase their pace but also reduce those errors. Deep learning could fill this role: not to make radiologists obsolete, but to help them make faster, better decisions while they continue to do the job the way they always have.

The solution: from detection to differential diagnosis

The report from Signify outlines four layers of image analysis software: computer-aided detection, quantitative imaging, decision support tools, and computer-aided diagnosis.

Computer-aided detection will be the most common option for the near future because it is relatively easy to implement. These systems alert radiologists to suspicious lesions or other areas that warrant a closer look, which makes analysis faster and reduces the risk of a stressed-out radiologist missing an important detail. The technology behind these systems is already quite mature, and investment will be heavy over the next few years.
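To make the idea of “alerting” concrete, here is a minimal sketch of how a detection system might prioritize regions for review. It assumes a hypothetical model that has already assigned each candidate region a suspicion score between 0 and 1; the `Region` type, the region names, the scores, and the 0.5 threshold are all illustrative assumptions, not details from the Signify report.

```python
from dataclasses import dataclass


@dataclass
class Region:
    """A candidate region in an image (hypothetical structure for illustration)."""
    region_id: str
    suspicion_score: float  # assumed model output in [0, 1]


def flag_for_review(regions, threshold=0.5):
    """Return regions whose score meets the threshold, most suspicious first.

    The detector only prioritizes what the radiologist looks at; it does not
    make a diagnosis, and the radiologist still reviews the full study.
    """
    flagged = [r for r in regions if r.suspicion_score >= threshold]
    return sorted(flagged, key=lambda r: r.suspicion_score, reverse=True)


# Hypothetical scores for three candidate regions in one study.
candidates = [
    Region("slice-12/upper-left", 0.91),
    Region("slice-40/center", 0.12),
    Region("slice-77/lower-right", 0.63),
]

for r in flag_for_review(candidates):
    print(r.region_id, r.suspicion_score)
```

The threshold is the main design lever in a sketch like this: lowering it flags more regions (fewer misses, more false alarms), while raising it does the opposite.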

Quantitative imaging tools help specialists segment, visualize, and quantify what they’re seeing. This can include automatic measurements of anatomical features, yet another way to help specialists streamline their work and reduce the potential for error. As with detection tools, these won’t make the final determination for the radiologist, but they simplify the decision-making process itself.

Decision support tools combine these two areas to create automated workflow tools for radiologists. By layering specific guidelines and goals on top of the deep learning-enabled analysis, these systems can keep radiologists on task and ensure a consistent diagnostic approach.

Finally, computer-aided diagnosis tools combine all the aforementioned tools to deliver diagnosis options backed by probabilities. These systems won’t make the final determination, but if a deep learning algorithm tells a radiologist that a certain diagnosis has a 90 percent probability of being correct, the radiologist can focus on confirming that diagnosis rather than spending time on less likely ones.
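One common way a classifier produces “diagnosis options backed by probabilities” is to pass its raw scores through a softmax function, which turns them into probabilities that sum to 1, and then rank the results. The sketch below assumes a hypothetical model that outputs one raw score (logit) per candidate diagnosis; the diagnosis labels and scores are invented for illustration and do not come from any real system or from the report.

```python
import math


def softmax(scores):
    """Convert raw model scores (logits) into probabilities that sum to 1."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - m) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def rank_diagnoses(logits):
    """Return (diagnosis, probability) pairs, most probable first."""
    probs = softmax(logits)
    return sorted(probs.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical logits from a hypothetical classifier for a single exam.
logits = {"benign nodule": 3.0, "malignant nodule": 0.5, "infection": -0.5}

for diagnosis, p in rank_diagnoses(logits):
    print(f"{diagnosis}: {p:.0%}")
# benign nodule: 90%
# malignant nodule: 7%
# infection: 3%
```

The ranked list is what lets the radiologist start with the most probable diagnosis. Whether such probabilities are well calibrated on a given hospital’s patients and scanners is a separate question, and it is one of the concerns the report raises below.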

This is all part of an evolution, Signify Research says, “from a largely descriptive field to a more quantitative discipline.”

The next few years will be blurry

Despite its predictions about the growth of deep learning in medical imaging, the report also acknowledges a number of issues. There are only a few software solutions available, and there is little evidence of how they will cope with variations in patient demographics, imaging protocols, and image artifacts. Computer-aided detection systems built using machine learning also didn’t perform very well in hospitals, which has left radiologists skeptical.

Hospitals will also be wary of the legal implications of deep learning’s “black box” functionality. How can they trace whether a particular result came from the radiologist or the algorithm? Who is to “blame” when things go wrong?

Simon Harris, a principal analyst at Signify Research, concluded that deep learning’s impact on hospitals “should not be under-estimated”: “It’s more a question of when, not if, machine learning will be routinely used in imaging diagnosis.”

Perhaps hospitals are waiting to see how deep learning performs in other critical areas, like autonomous vehicles, before accepting the inevitable deep learning-powered doctor’s visit.