The Conversational AI application pattern is a significant evolution in how applications are experienced and in how they are built and deployed.

Humans, doing the everyday things that we as humans do, interact with agents all the time. Most of us have used real estate agents when we have bought or sold a house, and many of us rely on insurance agents to help us navigate the world of home or car liability. Today, the agents we deal with are almost always humans, but as has been widely predicted, the emergence of Generative AI will likely lead to agents (not humans) powered by AI being the “guiding intelligence [that] achieves a result” for many of our currently human-mediated everyday interactions.

Admittedly, this shouldn’t come as a large surprise. Automation is already being used today for many straightforward workflows. An auction aide that makes intelligent bids for us is an example of an extant automated agent. What I’d like to focus on in this conversation is not only that Generative AI will dramatically increase the prevalence of automated agents, but exactly how those agents will drive new application architecture design patterns, and the implications for application delivery.

Two significant developments enabled by Generative AI are (a) the ability to have a conversational interface to our interactions with digital systems and (b) the ability to autonomously decompose a high-level task into subtasks, including the capacity to execute those subtasks using APIs for digital workflows. The first point, the conversational interface capability, is now well understood and accepted: over 67% of Americans have used OpenAI’s ChatGPT, and there are approximately 35 million regular North American users of ChatGPT, the most widely adopted GenAI chatbot. The second development is less well known but emerging: GenAI systems have spawned frameworks, such as AutoGPT and BabyAGI, that have demonstrated the ability to do high-level task planning and decomposition. We believe the integration of these two developments, an intelligent task planner with a conversational interface, will give rise to a new class of applications, Conversational AI Apps, that use an AI Orchestrator to coordinate the application’s internal activities.

A deeper look at Conversational AI applications

Conversational AI applications promise a quantum jump in the application user experience. Today’s user-experience pattern, in which a human must identify and execute each individual step in a digital workflow and then direct and choreograph the interactions across those steps, will be disrupted. Instead, the human will specify the end state or goal (the outcome) to be achieved, along with any constraints, using a conversational interface as appropriate. The job of decomposing the high-level workflow into subtasks, executing those subtasks, and directing the detailed subtask interactions will be delegated to an AI component we refer to as the AI Orchestrator.

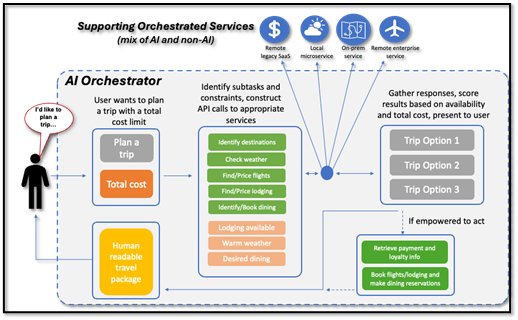

An example of a Conversational AI Application and the architectural pattern used is shown in the figure below, using travel planning as the sample scenario. In this not-so-future story, the user simply supplies a statement of intent or outcome: “I want to travel someplace warm next weekend, with good Chinese food, for less than $1000.” The AI Orchestrator takes a role analogous to a human travel agent, (a) identifying the requisite subtasks, (b) gathering the information from a variety of knowledge sources, (c) collating the results, and (d) filtering out any options that do not meet the high-level constraints. If the AI Orchestrator is further empowered to take actions on behalf of the human client, it can also follow a similar process to execute the transaction—retrieving payment and travel loyalty information, making payments, and making restaurant reservations. This workflow is visually depicted below:

The details of the workflow may change, but the key message is that the AI Orchestrator, not the human, is responsible for identifying subtasks and coordinating the workflow. The human client’s interface is intent-driven and conversational—“I want to travel…”. Not only is the human not forced into an externally designed workflow that may not be intuitive, but the user is also not required to specify all of the “how” level subtasks, such as looking at the weather, finding restaurants, and checking which destinations have both airfare and lodging that meet budget constraints.
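To make the Orchestrator's role concrete, here is a minimal Python sketch of the gather, collate, and filter steps for the travel example. It is an illustration under stated assumptions, not a reference implementation: the `Intent` and `Option` types, the in-memory lookup tables standing in for weather, dining, and fare services, and all sample values are hypothetical, and the natural-language parsing and LLM-driven task decomposition are assumed to have already happened.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    """The user's goal and constraints, assumed already parsed from natural language."""
    min_temp_c: float
    cuisine: str
    max_budget_usd: float


@dataclass(frozen=True)
class Option:
    """One candidate trip, collated from several knowledge sources."""
    destination: str
    forecast_temp_c: float
    cuisines: frozenset
    trip_cost_usd: float


# Hypothetical stand-ins for external knowledge sources; a real Orchestrator
# would call weather, restaurant, and airfare/lodging APIs instead.
WEATHER = {"San Diego": 24.0, "Miami": 29.0, "Seattle": 12.0}
DINING = {"San Diego": {"Chinese", "Mexican"}, "Miami": {"Cuban"}, "Seattle": {"Chinese"}}
TRIP_COST = {"San Diego": 850.0, "Miami": 1200.0, "Seattle": 600.0}


def gather(destination: str) -> Option:
    """Run the per-destination subtasks and collate their results."""
    return Option(
        destination=destination,
        forecast_temp_c=WEATHER[destination],
        cuisines=frozenset(DINING[destination]),
        trip_cost_usd=TRIP_COST[destination],
    )


def meets_constraints(option: Option, intent: Intent) -> bool:
    """Check an option against the user's high-level constraints."""
    return (
        option.forecast_temp_c >= intent.min_temp_c
        and intent.cuisine in option.cuisines
        and option.trip_cost_usd <= intent.max_budget_usd
    )


def orchestrate(intent: Intent, destinations: list) -> list:
    """Gather options for every destination, then filter by the constraints."""
    options = [gather(d) for d in destinations]
    return [o for o in options if meets_constraints(o, intent)]


# "Someplace warm, with good Chinese food, for less than $1000."
intent = Intent(min_temp_c=20.0, cuisine="Chinese", max_budget_usd=1000.0)
results = orchestrate(intent, list(WEATHER))
print([o.destination for o in results])
```

The user supplies only the `Intent`; every "how" step lives inside `orchestrate`, which is the division of labor the pattern describes.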

From the perspective of the application consumer, this is a transformative change in user experience. The complexity, as measured by time and human effort, is greatly reduced, while the quality of the outcome improves relative to what a human would typically achieve. This is not just a theoretical possibility: in our conversations with CTOs and CIOs across the world, we have heard that enterprises are already planning to roll out applications following this pattern in the next 12 months. In fact, Microsoft recently announced a conversational AI app specifically targeting travel use cases.

If we now consider how this automated workflow is architecturally executed, we can see that the Conversational AI application pattern represents a significant evolution not just in how applications are experienced but also in how applications are built and deployed. At the “core” of the architecture is the AI Orchestrator that performs the aforementioned roles of planner, choreographer, organizer, and judge—but the Orchestrator will rely on a variety of supporting services to gather the data needed and execute required actions.

These supporting services need not exist in the Orchestrator’s local environment (e.g., in the same Kubernetes cluster). In fact, they will often run somewhere other than the Orchestrator’s environment because of concerns around data sensitivity, regulatory compliance, or partner business constraints. In other cases, where a supporting service does not represent a core competency or value proposition of the enterprise that owns the AI app, the service may simply be a black box, abstracted behind a SaaS interface.

The underlying premise—that “a service can be anywhere”—is not unique to the design of Conversational AI apps, but this class of apps does accelerate the pre-existing evolutionary trend. The end result is a marked shift from the past, where the collective portfolio of an enterprise’s applications spanned multiple public clouds and on-prem environments; now, each application itself is a hybrid, multi-cloud deployment in its own right.

Contextualizing this idea within the travel example, consider that the application owner may want to keep some core “crown-jewel” intellectual property/capabilities of the application (e.g., an LLM fine-tuned for travel sub-task decomposition) “on-prem,” but allow other application capabilities more related to foundational services (e.g., a database with customer account and personalization information) to be deployed in a public cloud instance. And still other capabilities, often those that are required for the application but not core competencies of the application owner (e.g., weather forecasts and payment services) are likely to be consumed as SaaS services, but accessed as if they were architecturally “inside” the application even though the API calls are made to external APIs.
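One way to picture this split is as a placement map from application components to the environments they run in. The sketch below is illustrative only: the component names and the `PLACEMENT` table are hypothetical labels for the examples in the paragraph above, not part of any real deployment tool.

```python
from enum import Enum


class Environment(Enum):
    ON_PREM = "on-prem"
    PUBLIC_CLOUD = "public-cloud"
    SAAS = "saas"


# Hypothetical component names, placed as in the travel example.
PLACEMENT = {
    "task-decomposition-llm": Environment.ON_PREM,    # "crown-jewel" IP
    "customer-profile-db": Environment.PUBLIC_CLOUD,  # foundational service
    "weather-forecast": Environment.SAAS,             # not a core competency
    "payments": Environment.SAAS,                     # not a core competency
}


def components_by_environment(placement: dict) -> dict:
    """Group component names by the environment they are deployed in."""
    grouped = {env: [] for env in Environment}
    for component, env in placement.items():
        grouped[env].append(component)
    return grouped


def crosses_boundary(caller: str, callee: str, placement: dict) -> bool:
    """Return True when a call between two components leaves the caller's environment."""
    return placement[caller] is not placement[callee]


grouped = components_by_environment(PLACEMENT)
print(grouped[Environment.SAAS])
print(crosses_boundary("task-decomposition-llm", "payments", PLACEMENT))
```

Even in this four-component toy, any call from the on-prem planner to another component crosses an environment boundary, which is why a single logical application ends up being a hybrid, multi-cloud deployment.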

In short, what the user perceives as “one” single application will now be likely to include software components running in multiple disparate environments: an enterprise’s on-prem infrastructure, enterprise-owned infrastructure deployed in public clouds, and external SaaS services deployed in locations that are unknown or abstracted away. Compared to more traditional apps, the resulting architecture is more distributed: it spans on-prem and multiple public clouds, leverages both AI and traditional services, and uses consumption form factors that may be containers, virtual machines, or SaaS.


The role of APIs in Conversational AI apps

A second key theme for application developers is the increased primacy of APIs in the conversational AI pattern, which arises from the upleveling of how humans interact with the application. As the interaction model moves away from the granularity of app-per-subtask toward the abstraction level of a high-level intent or desired outcome, the subtask-level workflows that would typically have been controlled by a human and mediated via a GUI will instead be managed by an automated AI agent that invokes APIs directly.

The implications of this uber-distributed, agent-orchestrated application model are far-reaching for operations teams as well in the domains of both deployment and security. To begin with, the distributed nature of the application implies that no single infrastructure provider will be able to provide holistic observability for the overall app. This will result in next-level complexity challenges in the areas of debuggability, performance management, and OpEx cost controls. Operations teams will need solutions that operate consistently and seamlessly across on-prem, public cloud, and SaaS environments. Another key implication stemming from the application’s need to securely transfer data and make API calls across these disparate network environments will be an increased emphasis on Multi-Cloud Networking (MCN) solutions.
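The observability gap can be illustrated with a small sketch that stitches together timing records from every environment for a single user request. Everything here is an assumption made for illustration: the `Span` record, the environment labels, and the sample durations are hypothetical, and the function simply sums span durations per environment, which matches wall-clock time only if the calls run sequentially.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Span:
    """One timed call made on behalf of a user request (hypothetical record shape)."""
    request_id: str
    environment: str
    service: str
    duration_ms: float


def latency_by_environment(spans: list, request_id: str) -> dict:
    """Sum the span durations for one request, grouped by environment."""
    totals = defaultdict(float)
    for span in spans:
        if span.request_id == request_id:
            totals[span.environment] += span.duration_ms
    return dict(totals)


# Spans that would, in practice, be collected from three different providers.
spans = [
    Span("req-1", "on-prem", "task-planner", 120.0),
    Span("req-1", "public-cloud", "customer-profile-db", 35.0),
    Span("req-1", "saas", "weather-forecast", 210.0),
    Span("req-1", "saas", "payments", 90.0),
    Span("req-2", "saas", "weather-forecast", 180.0),
]

breakdown = latency_by_environment(spans, "req-1")
print(breakdown)
```

No single provider in this example sees more than its own rows; the per-request breakdown only exists once the records from all environments are correlated by a shared request identifier.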

Security teams will have to deal with multiple challenges as well. One overarching challenge will be the analog of the deployment teams’ operational concerns: how to apply consistent security controls and governance policies across the multiple diverse environments used to compose a single logical application. A second emergent class of concerns for application security practitioners will be a need to place much greater emphasis on the threat surfaces that exist “inside” the application. In the past, an application’s core logic resided within a single environment, such as a single Kubernetes namespace or a single VPC, so compromising the “internals” of the app required sophisticated attack vectors that attacked the supply chain or compromised an internal microservice.

Going forward, because the “internal” components of an application will themselves be distributed, likely using different infrastructures, the result will be that the internal attack surfaces are both more directly accessible to attackers and also built using a greater diversity of technology infrastructures—in combination providing a larger and more easily accessed threat surface for attackers to exploit. A third challenge will be dealing with the evolution of bot protection in a future world where AI-powered agents using APIs directly are pervasive and are, in fact, the most common legitimate clients of APIs. In that environment, the bot challenge will evolve from discerning “humans” vs. “bots,” leveraging human-facing browsers, towards technologies that can distinguish “good” vs. “bad” automated agents based on their observed AI behavior patterns.

A final word on the impact of AI

The advent of Generative AI is having and will continue to have transformative impacts across multiple facets of the technology space. While we are unable to foresee all of these impacts today, one transformational change that appears imminent is in how applications are experienced. GenAI will relieve humans of the legacy interaction pattern of spelling out each step in a complex workflow while being forced to live within the constraints of highly structured and opinionated GUIs. Instead, applications will be empowered to take a more human-first approach, where outcomes and intent are specified alongside constraints in natural language. Recent AI advances are ready to supply the requisite foundational technology today, and the compelling improvement in user experience will provide strong demand. Therefore, technologists across the board (application developers, operations teams, and security teams) must be prepared for the challenges this new architectural pattern will bring with it.