MemSQL uses a trinity of Apache Kafka, Apache Spark, and its own platform to build real-time data pipelines. Here’s how:

The global in-memory computing market is expected to grow from $5.58 billion this year to $23.15 billion by 2020—a compound annual growth rate of 32.9 percent, according to research firm Markets and Markets.

In-memory has become popular because it is scores faster than the old method of reading memory from disks. With rapidly declining costs for RAM, in-memory technology has also become affordable during a time when data growth is exploding, including on social media, from clickstreams and online transactions, and from IoT devices usually embedded with a variety of smart sensors.

There’s also a solid business case for using real-time data. Transaction data, in particular, can lose a lot of its value if it takes too long to analyze. Consider credit card fraud. A thief can go an hours-long shopping spree, and it’s usually covered by the credit company and not the customer. Banks and other financial services firms are also at risk from fraudulent or risky real-time transactions.

What’s too long for “real time” depends on the business case, but some applications require response times of less than a minute. Real-time marketing applications, for example, must immediately process clickstreams and data from customer-relationship management systems to present the “next best offer” for the buyer, or else produce an incentive—such as free shipping or a discount—to close an online sale. Other applications where real-time data plays a critical role include numerous smart grid applications; preventing accidents or costly repairs with industrial equipment; or monitoring ill patients.

In-Memory Pipelines With MemSQL

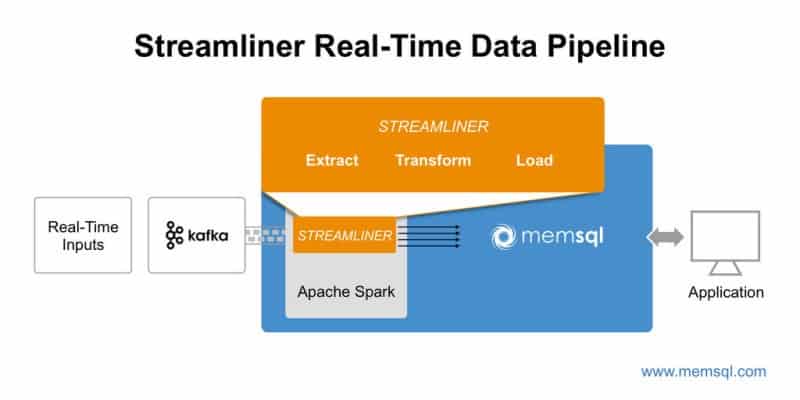

A recent webinar from MemSQL, “Building Real-Time Data Pipelines Through In-Memory Architectures,” showed a “trinity” deployment of Apache Kafka, Apache Spark, and MemSQL for real-time data analysis.

Anyone of course with enough know-how can use Kafka and Spark. But MemSQL, based in San Francisco, released in September MemSQL Streamliner, which is an open-source project that enables one-click deployment of Apache Spark. It also contains a web-based user interface for setting up a pipeline.

Here’s the basic architecture as described in the webinar:

For use cases, MemSQL cited clients such as Comcast, which delivers IP video to Apple, Microsoft, and Samsung devices, and wanted to monitor the health of streaming video data and respond immediately to unexpected latency issues. Zynga has also used MemSQL to provide real-time personalization for online games, and MemSQL also worked with Pinterest to create real-time dashboards for pins.

Another use case for in-memory processing is Shutterstock, an online digital media company that uses a rack of 16 MemSQL nodes, each with 256 GB of memory, to get real-time updates on CPU, disk, and RAM use, as well as network traffic, pictures uploaded and downloaded, and revenue per minute from online sales. Shutterstock has said that, when crunching data on site performance, “a delay even as short as five minutes isn’t acceptable.”

What’s Next for MemSQL

MemSQL ranked as a “visionary” in Gartner’s 2015 magic quadrant for operational database management systems, ranking near NuoDB and Fujitsu, but behind industry leaders such as SAP (a pioneer of in-memory through its HANA platform); Amazon Web Services; IBM; Oracle; and Microsoft. A “unique” feature of MemSQL, however, is that it can compile SQL to machine code, push it to distributed nodes, and allow that code to be re-used, according to Information Management, which quoted Gartner.

A 2015 report from Gartner stated that MemSQL’s strengths include tech support, innovation, and rich functionality (such as ACID compliance, support for geospatial data and Apache Spark). It is also easy to program and implement, Gartner stated.

Eric Frenkiel. CEO of MemSQL, said the company looks to expand with predictive analytics.

“I think the notion of building predictive applications is very exciting,” he said. “Because it’s taking the data scientists out of the back office and putting the model in the front of them.”

Want more? Check out our most-read content:

Frontiers in Artificial Intelligence for the IoT: White Paper

Real-Time Retail: Why Uniqlo Employees Use Handhelds

Value of Real-Time Data Is Blowing in the Wind

IoT Architectures for Edge Analytics

Liked this article? Share it with your colleagues!