The new systems will triple the capacity and double the storage and interconnect speed NOAA can access, allowing the agency to both create higher-resolution models and build more comprehensive global models.

The National Oceanic and Atmospheric Administration (NOAA), an arm of the U.S. Department of Commerce, has announced it will upgrade the supercomputer it relies on to generate weather forecasts to a system rated at 12 petaflops once it becomes operational in 2022.

NOAA updates the core platform it relies on to analyze weather data once a decade. After an open competition, NOAA awarded CSRA LLC, an arm of General Dynamics Information Technology, an eight-year contract that includes a two-year option to renew.

Under the terms of that contract, CSRA will manage two Cray supercomputers that are at the core of the NOAA Weather and Climate Operational Supercomputing System. The primary system will be located in Manassas, Va., with a backup system located in Phoenix. Those systems replace both an older generation of Cray supercomputers and systems from Dell Technologies that NOAA currently relies on.

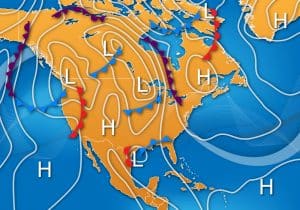

The new systems will triple the capacity and double the storage and interconnect speed NOAA can access. That will allow the agency to both create higher-resolution models and build more comprehensive global models using larger ensembles, advanced physics, and improved data assimilation techniques. Those techniques are being created by the Earth Prediction Innovation Center (EPIC), an initiative through which weather researchers around the globe use cloud computing resources to collaborate as part of a Unified Forecast System community.

Once the new systems are installed, NOAA will have a total of 40 petaflops of computing capacity for processing massive amounts of weather data, thanks to additional supercomputers at its research and development centers in West Virginia, Tennessee, Mississippi, and Colorado.

The analytics created by NOAA will then be shared with universities and media outlets, as well as with a variety of commercial analytics applications that make use of weather data in everything from mobile applications for consumers to global logistics applications relied on by shippers.

“Data now drives a multibillion-dollar weather industry,” says David Michaud, director of the Office of Central Processing for NOAA.

NOAA currently updates the weather forecasts it provides four times a day. However, the days when NOAA was the only source of weather analytics have gone by. IBM, for example, via its Weather Channel subsidiary, also leverages supercomputers to provide analytics. Thanks to the rise of various Open Data initiatives, it’s now simpler for governments and organizations to share weather data via application programming interfaces (APIs).

Of course, there are very different models that can be employed to forecast the weather. However, Michaud says that as more compute capacity becomes available, it will become possible to predict, for example, how flash flooding may soon affect a much smaller geographic area.
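To give a rough sense of why finer-grained forecasts depend on so much more compute, here is a minimal back-of-the-envelope sketch. It is not NOAA's method: it assumes a common rule of thumb in which refining the horizontal grid multiplies the number of grid columns in two dimensions and also forces a proportionally shorter time step, so cost grows with roughly the cube of the refinement factor. The function names and the 13 km starting grid spacing are hypothetical, chosen only for illustration.

```python
def relative_cost(old_spacing_km: float, new_spacing_km: float) -> float:
    """Rough compute-cost multiplier for refining horizontal grid spacing.

    Assumption (rule of thumb, not a NOAA figure): the domain and vertical
    levels stay fixed, and the time step shrinks in proportion to the
    grid spacing.
    """
    if old_spacing_km <= 0 or new_spacing_km <= 0:
        raise ValueError("grid spacings must be positive")
    refinement = old_spacing_km / new_spacing_km
    # Two horizontal dimensions plus one factor for the shorter time step.
    return refinement ** 3


def affordable_spacing(old_spacing_km: float, compute_multiplier: float) -> float:
    """Grid spacing reachable with `compute_multiplier` times the compute,
    under the same cubic-cost assumption."""
    if old_spacing_km <= 0 or compute_multiplier <= 0:
        raise ValueError("inputs must be positive")
    return old_spacing_km / compute_multiplier ** (1.0 / 3.0)


if __name__ == "__main__":
    # Hypothetical starting point: a 13 km grid and three times the capacity.
    print(f"Halving the spacing costs about {relative_cost(12.0, 6.0):.0f}x the compute")
    print(f"3x compute supports roughly {affordable_spacing(13.0, 3.0):.1f} km spacing")
```

Under these assumptions, tripling capacity shrinks the affordable grid spacing by a factor of only about 1.44, which is one reason forecasters keep asking for more compute. Real models also spend added capacity on larger ensembles and more detailed physics, so the actual trade-off will differ.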

As the debate over the effects and causes of climate change continues to swirl, more people than ever are paying attention to weather, including politicians. The challenge and the opportunity now is to take advantage of additional compute horsepower to inform often heated debates with actual science. Only then can the rhetoric that currently dominates the debate over climate change, one way or another, finally be put to rest.