A giant telescope is undertaking a 10-year survey of the universe in a hunt for dark matter. The experiment’s success depends on real-time management of data and telemetry to ensure no disruptions in cosmic exploration.

Name of Organization: Large Synoptic Survey Telescope

Industry: Scientific research/government

Location: Cerro Pachón, Chile

Business Opportunity or Challenge Encountered

When selecting a platform for real-time analytics and data sharing, rarely does it get more complicated than the Large Synoptic Survey Telescope.

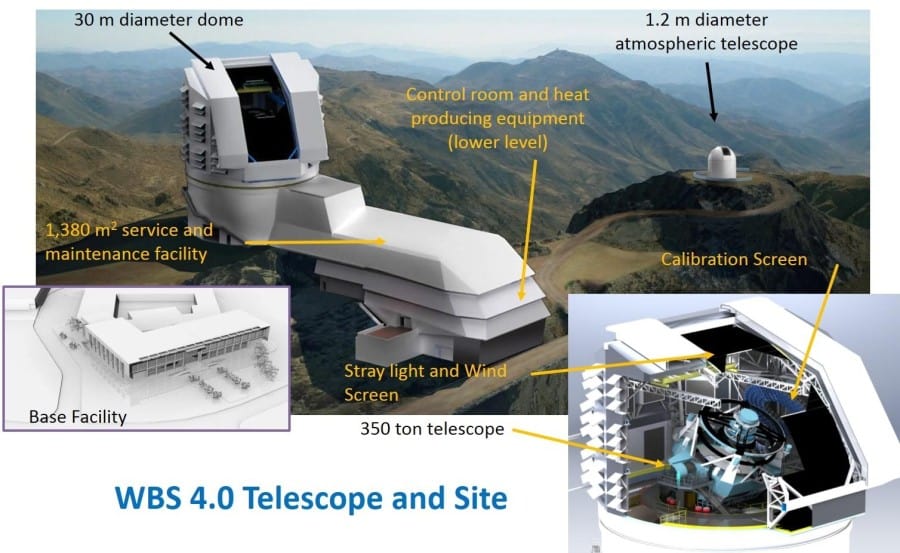

The LSST, which has been identified as an international scientific priority, will sit atop the Chilean mountain of Cerro Pachón and scan the heavens starting in 2020. With a primary mirror more than eight meters across, the telescope will generate 30 terabytes of data night after night for more than 10 years. The challenge: peer into the outer reaches of the cosmos and shed light on such phenomena as dark matter and dark energy, providing clues to the nature and origin of the universe.

Funded by the U.S. Department of Energy and the National Science Foundation, the LSST is a public-private partnership featuring an immense collaboration of countries, companies and universities—including more than 400 scientists and engineers.

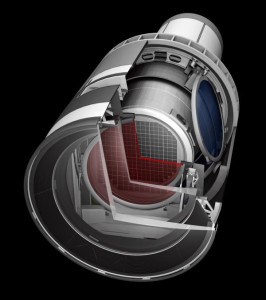

LSST also works in tandem with the world’s largest digital camera, weighing in at more than 6,000 pounds and snapping 3.2-gigapixel images every 20 seconds. Much of the data—even full-resolution images—will be available for download from the Internet as the LSST project will provide analysis tools for students and the public. That produces another challenge: how to manage all that data.

The Large Synoptic Survey Telescope is a revolutionary facility which will produce an unprecedented wide-field astronomical survey of the universe using an 8.4-meter ground-based telescope.

The data generated by the LSST will show scientists more of the universe than humanity has seen looking through all previous telescopes combined, providing 1,000 images of every part of the sky. But acquiring all that scientific information requires control and telemetry data that must be captured, monitored and analyzed in order to reactively adjust and control the telescope in a 24/7 operation.

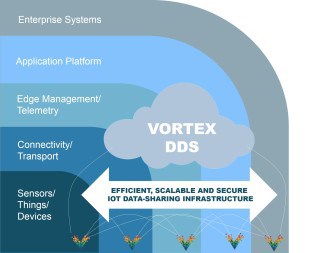

Vortex is based on the DDS open standard and is a crucial enabler for systems that must reliably and securely deliver high volumes of data with stringent end-to-end QoS.

How This Business Opportunity or Challenge Was Met

To manage the large volumes of data generated by the project, the consortium adopted a data-sharing platform designed to control, monitor and regulate the data interfaces, ensuring the right data gets to the right place in real time within the telescope facility. The solution, PrismTech Vortex, employs data-sharing technology based on the Data Distribution Service (DDS) standard developed by the Object Management Group (OMG). DDS improves efficiency and developer productivity because it decouples applications from the data and handles state management and alignment itself. The data becomes accessible and updatable by any application or system that requires it.

Without DDS, the applications themselves would have to shoulder the tasks of message interpretation and state management. For LSST, it is critical that the applications do not handle the data in this way. For a system this size, that effort is non-trivial and would require a development team three times larger to implement the intended application feature set.

Information is shared peer-to-peer between publishing and subscribing applications, which interact with the global data space through a specified application programming interface (API). The API imposes a system development discipline in which application interaction is managed through a common understanding of the data.
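The publish/subscribe pattern described here can be illustrated with a minimal Python sketch. It is a toy, in-process stand-in only: the `DataSpace` class, its methods and the topic name are hypothetical, chosen for illustration, and are not the Vortex or DDS API.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List, Tuple

Listener = Callable[[str, Any], None]


class DataSpace:
    """Toy in-process stand-in for a shared data space (not the DDS API)."""

    def __init__(self) -> None:
        self._latest: Dict[str, Any] = {}  # last known value per topic
        self._listeners: Dict[str, List[Listener]] = defaultdict(list)

    def publish(self, topic: str, sample: Any) -> None:
        # The data space, not the application, keeps the current state.
        self._latest[topic] = sample
        for listener in self._listeners[topic]:
            listener(topic, sample)

    def subscribe(self, topic: str, listener: Listener) -> None:
        self._listeners[topic].append(listener)
        # A late joiner immediately receives the last known value.
        if topic in self._latest:
            listener(topic, self._latest[topic])

    def read(self, topic: str) -> Any:
        # Returns None if nothing has been published on the topic yet.
        return self._latest.get(topic)


space = DataSpace()
received: List[Tuple[str, Any]] = []

# Hypothetical topic name; published before any subscriber exists.
space.publish("dome/temperature_c", 11.5)
space.subscribe("dome/temperature_c", lambda t, s: received.append((t, s)))
space.publish("dome/temperature_c", 11.2)
```

In this sketch the subscriber never refers to the publisher. Both know only the topic, and the data space holds the current state, which is the kind of decoupling the article attributes to DDS.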

The LSST’s 10-year survey of the universe depends upon the real-time management of all telemetric data regarding the conditions under which the survey measurements are taken. In support of this effort, an auxiliary telescope is also being constructed and will monitor atmospheric variables in the primary telescope’s field of view. Comprehensively capturing measurement conditions is at the heart of the program’s success. The signal-to-noise ratio for this experiment is extremely small. The possibility of winnowing information from the noise diminishes greatly unless the measurement conditions are properly accounted for, collated and correlated.
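One simple way to picture the correlation of measurement conditions with survey measurements is a timestamp lookup: for each exposure, find the most recent condition reading taken at or before it. The Python sketch below assumes this approach purely for illustration. The function name, the readings and the exposure times are hypothetical and do not come from the LSST software.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple


def latest_at_or_before(
    samples: List[Tuple[float, float]], t: float
) -> Optional[float]:
    """Return the value of the newest sample with timestamp <= t, or None.

    `samples` must be sorted by timestamp.
    """
    times = [ts for ts, _ in samples]
    i = bisect_right(times, t)  # index of the first sample strictly after t
    return samples[i - 1][1] if i > 0 else None


# Hypothetical atmospheric readings: (seconds since start of night, value)
readings = [(0.0, 0.80), (30.0, 0.85), (60.0, 0.95)]
# Hypothetical exposure timestamps in the same time base
exposure_times = [20.0, 40.0, 60.0]

# Tag each exposure with the condition reading in effect when it was taken.
tagged = [(t, latest_at_or_before(readings, t)) for t in exposure_times]
```

A production system would also need to handle clock alignment and gaps in the telemetry, which this sketch ignores.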

The applications that control and monitor LSST require a real-time view of the data generated from thousands upon thousands of parameters. With this data, they perform a range of complex functions, the most crucial of which may be the adjustment and calibration of the telescope’s optical surface. Additionally, the automated nature of the survey introduces complexity, which made it necessary to develop special scheduling software.

Measurable/Quantifiable and “Soft” Benefits from This Initiative

A minimum of 96 percent uptime over a 10-year period is required to minimize risks to the LSST project and to ensure the experiment data is not corrupted during this timeframe. The data-sharing platform enables real-time monitoring and predictive capabilities, which will ensure the survey does not suffer disruptions in its cosmic explorations.
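To put the 96 percent figure in perspective, the short Python calculation below converts it into a downtime budget. It assumes, for illustration only, that uptime is measured against every calendar hour of the 10 years; the article does not say how the requirement is actually measured, and the function name is hypothetical.

```python
HOURS_PER_YEAR = 365.25 * 24  # average year, including leap days


def downtime_budget_hours(availability: float, years: float) -> float:
    """Hours of downtime permitted at a given availability over `years`."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * years * HOURS_PER_YEAR


budget_hours = downtime_budget_hours(0.96, 10)
budget_days = budget_hours / 24
print(f"{budget_hours:.0f} hours, about {budget_days:.0f} days")
# prints "3506 hours, about 146 days"
```

Under that assumption, the whole facility can be unavailable for only about 146 days in total across the decade.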

Another benefit is the data-sharing platform’s enablement of real-time analytics. Specifically, it complements the use of complex event processing (CEP) engines to identify potential disruptions and respond to them as quickly as possible. The DDS-based solution provides a stream abstraction for CEP that is safe, high performance, highly available, decoupled in space/time, and dynamically discoverable.
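As a rough illustration of the kind of rule a CEP engine might evaluate over a telemetry stream, the Python sketch below flags a parameter that stays above a limit for several consecutive samples. The rule, the function name, the limit and the sample values are all hypothetical; the article does not describe the rules LSST actually uses.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, value)


def sustained_excursions(
    stream: Iterable[Sample], limit: float, count: int
) -> Iterator[float]:
    """Yield the timestamp at which `count` consecutive samples exceeded `limit`."""
    window: Deque[bool] = deque(maxlen=count)
    for timestamp, value in stream:
        window.append(value > limit)
        if len(window) == count and all(window):
            yield timestamp
            # Re-arm so one long excursion is not reported on every sample.
            window.clear()


# Hypothetical telemetry samples for a single parameter
samples = [(0, 0.1), (1, 0.6), (2, 0.7), (3, 0.8), (4, 0.2), (5, 0.9)]
alerts = list(sustained_excursions(samples, limit=0.5, count=3))
```

Because the function consumes any iterable, it could be fed from a live subscription just as easily as from a list, which is the stream abstraction the article refers to.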

(Source: PrismTech)
