Process + Data are both critical for innovation and transformation. The potential and the power of Data insights are realized in the digitized and automated processes.

This is Part II of a two-part article on Process + Data. Part I described the evolution of intelligent DBMSs and intelligent BPMSs. Artificial Intelligence, as well as various digital technologies, has had a tremendous impact on both trends. iBPMSs also support process and work automation, especially through Robotic Process Automation.

Part I highlighted the lack of balance between DBMSs – especially advanced NoSQL databases – and BPMSs in enterprise architectures. Through AI and other digital technologies, they have both become intelligent. Furthermore, we are now witnessing a plethora of approaches for No Code/Low Code development in both domains. There are now Citizen Developers and Citizen Data Scientists.

However, the DBMS layer, with multiple Relational and NoSQL databases, is ubiquitous in IT infrastructures and Enterprise Architectures. Data is the new crude oil!

The BPMS layer? Not so much.

To make the most of the synergy between Process and Data, the Enterprise-In-Motion needs to focus on the obvious: the core business applications and solutions that drive business value. To reiterate the obvious: it is not about technology!

Valuestreams (aka Valuechains)

The data approach is, at its core, a bottom-up approach focusing on persistent data. Persistent data is important and critical, but the approach is still bottom-up and technology-driven. Here we will elucidate three robust use cases that take a different approach, one much more supportive of the Autonomic Enterprise-In-Motion.

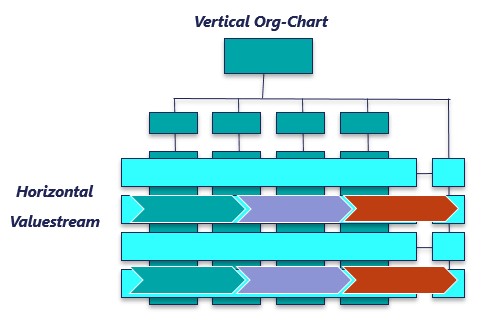

The process-driven application approach is very different. A fundamental assumption in the process-driven approach is that a business is a collection of Valuestreams. Businesses think in terms of objectives and milestones or stages to achieve these objectives in the context of Valuestreams. Most organizations are still organized vertically, and each business unit focuses on its own measurable objectives.

Silos in business units, various applications, and trading partners are pervasive

Valuestreams run horizontally across business units, legacy applications, and trading partners, aiming to optimize customer experiences and thereby realize and operationalize value. The Enterprise-In-Motion needs a cultural change that captures, digitizes, and automates the valuestream for optimized visibility and control.

Valuestream Digitization and Automation are the central pillars of Digital Transformation

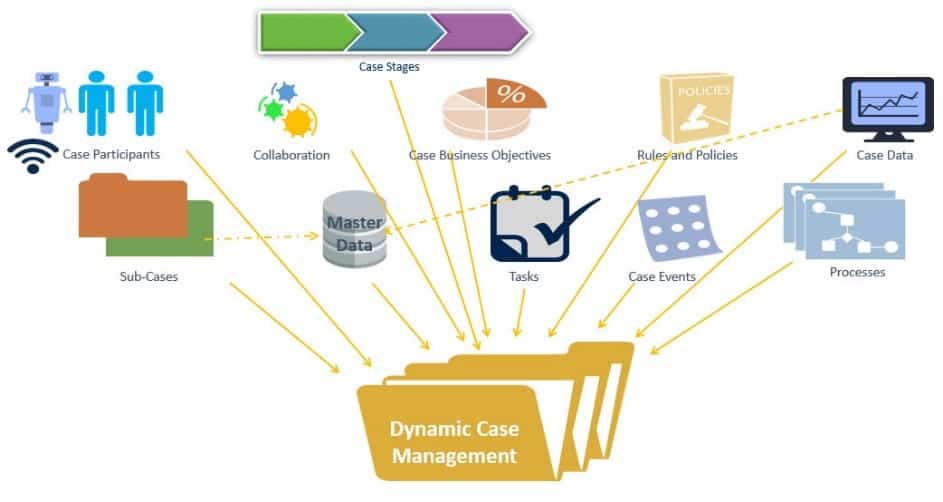

Organizationally, the culture needs to encourage empowered ownership of the valuestream across silos. Digital Process Automation (DPA) achieves the digitization and automation of valuestreams through Dynamic Case Management (DCM).

Typically, business units are siloed, and communication between them happens through manual hand-offs. Interestingly, digital technologies and even digital transformation practices have had little impact on vertically organized, siloed organizations: the organizational hierarchy has persisted. Valuestreams, by contrast, run horizontally, often with an empowered owner responsible for their operational excellence. If the valuestreams are not optimized through DPA, there will be considerable waste and inefficiency.

The power of the digitized & automated Valuestreams is the core enabler of the three use cases

Process + Master Data

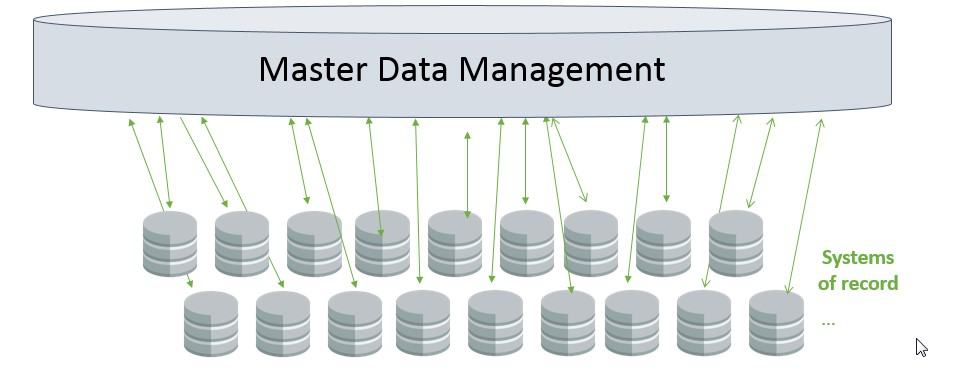

According to the MDM Institute: Master Data Management (MDM) is the authoritative, reliable foundation for data used across many applications and constituencies with the goal to provide a single view of the truth no matter where it lies.

The silos mentioned above (in organizations, business units, and the applications they own) are the main reason for inconsistencies in the information about the same entity: customer, supplier, product, or other. Master Data addresses several pain points. Here are a few examples:

- Inconsistencies and poor data quality result in poor, erroneous, and even risky decisions. Automated dynamic case executions are only as good as the consistency of their data.

- For Customers, Products, Pricing, Partners, Suppliers, Services, and other Master Data shared across applications, different siloed systems often contain contradictory information about the same entity (e.g., a wrong marital status). Considerable effort is wasted trying to keep heterogeneous data consistent and of high quality.

- Mergers and Acquisitions also create data consistency challenges, making it harder to maintain a single version of the truth about a customer or offering across the organization.

Here are some typical examples:

- Customer address is not updated consistently across all the databases or applications that store the address.

- The price of a product is not consistent and depends on the channel (retail, Web, mobile app, etc.).

- The Supplier list, availability, and list of parts are inconsistent across various ERP systems.

There are many more.
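Inconsistencies like the address and marital-status examples can at least be surfaced mechanically. The minimal Python sketch below assumes three hypothetical systems (`crm`, `billing`, `web`), each holding a snapshot of the same customer record; it groups values by entity and field and reports every field on which the systems disagree. It only detects conflicts and does not decide which value is authoritative, which is the governance question Master Data has to answer.

```python
from collections import defaultdict

# Hypothetical snapshots of one customer's master data, as held by three
# siloed systems. System names, IDs, and fields are illustrative only.
SYSTEMS = {
    "crm": {"C001": {"address": "12 Oak St", "marital_status": "married"}},
    "billing": {"C001": {"address": "98 Elm Ave", "marital_status": "married"}},
    "web": {"C001": {"address": "12 Oak St", "marital_status": "single"}},
}


def find_conflicts(systems):
    """Return {(entity_id, field): {system: value}} for every field whose
    value differs between at least two systems."""
    seen = defaultdict(dict)  # (entity_id, field) -> {system: value}
    for system, entities in systems.items():
        for entity_id, fields in entities.items():
            for field, value in fields.items():
                seen[(entity_id, field)][system] = value
    # Keep only the (entity, field) pairs on which the systems disagree.
    return {key: values for key, values in seen.items()
            if len(set(values.values())) > 1}


if __name__ == "__main__":
    for (entity_id, field), values in sorted(find_conflicts(SYSTEMS).items()):
        print(entity_id, field, values)
```

With the sample data, both `address` and `marital_status` for customer `C001` are reported, because at least one system disagrees with the others on each.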

Master Data is needed to address data quality, data consistency, data sourcing, data accuracy, data integrity, data replication, and data completeness.

As noted above, an enterprise is the aggregate of its Valuestreams. These valuestream executions are only as good as the consistency of the data. The Computer Science expression “Garbage In, Garbage Out” (GIGO) is very much applicable here. In fact, data inconsistencies will sooner or later impact the customer experience, resulting in customer dissatisfaction: lower Net Promoter Scores (NPS) and a higher ratio of detractors!

The Bottom-Up MDM Approach

Organizations often attempt to address the challenges of Master Data through Master Data Management tools and systems. These efforts can end up as “big bang” master data projects with expensive tools. Some organizations have established MDM centers of excellence for governance. The technology and data consistency challenges are formidable and important to address, including data cleansing, missing data, data consistency, ETL, and data integration. The danger is the enormous effort required to normalize master data without prioritizing it through the business objectives. One common problem is that initiatives attempting to resolve master data challenges often do so in a silo. The MDM itself becomes yet another layer of software that needs to be managed. If data copying and replication are used, they also create additional overhead and potential inconsistencies.

However, the more serious problem is the lack of focus and precise justification in the creation and management of specific master data. For instance, the aggregate number of fields or attributes about a Customer from various systems of record could be in the hundreds. The most critical customer Valuestreams will typically need a very small subset of the available fields or attributes; the rest will rarely, if ever, be used. While the rationale behind the MDM system might make sense, this bottom-up approach can be sub-optimal.

Top-Down Valuestream Approach

A better approach is to treat MDM challenges as part of overall continuous improvement initiatives, especially through end-to-end dynamic case management solutions that connect the silos that touch and manipulate master data. The Enterprise-In-Motion is an aggregate of Valuestreams, and MDM is about making the Valuestreams run as well as they can. Each of these Valuestreams has specific business objectives, for instance, reducing cost, improving the NPS, or generating revenue. The core of this approach is a Dynamic Case Management (DCM)-enabled layer that wraps and modernizes legacy systems. As noted in Part I, DCM is a key capability in DPA, alongside Robotic Automation, AI, and other Digital Technologies.

The top-down approach focuses only on those fields or attributes that are needed for specific Valuestreams that are optimized, digitized, and automated through DPA.

This “top-down” approach prioritizes transformational projects that include MDM improvements, balancing risk with business value. The technical database issues must still be addressed, but with a revamped approach to priorities.
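The top-down scoping can be illustrated with a minimal Python sketch. It assumes each prioritized Valuestream declares the Customer fields it actually needs; the master data scope is then the union of those needs rather than the hundreds of attributes spread across systems of record. The valuestream names, field names, and the 246-attribute inventory are hypothetical placeholders.

```python
# Hypothetical inventory of Customer attributes found across systems of
# record: 240 rarely used attributes plus a handful of commonly used ones.
AVAILABLE_CUSTOMER_FIELDS = {f"attr_{i:03d}" for i in range(240)} | {
    "name", "email", "address", "phone", "credit_limit", "segment",
}

# Hypothetical prioritized Valuestreams and the Customer fields each needs.
VALUESTREAM_NEEDS = {
    "order_to_cash": {"name", "address", "credit_limit"},
    "customer_onboarding": {"name", "email", "phone", "address", "consent_flag"},
}


def master_data_scope(needs, available):
    """Return (in_scope, missing).

    in_scope: fields some prioritized Valuestream needs and some system holds.
    missing:  fields a Valuestream needs that no system provides yet.
    """
    required = set().union(*needs.values())
    return required & available, required - available


if __name__ == "__main__":
    in_scope, missing = master_data_scope(VALUESTREAM_NEEDS, AVAILABLE_CUSTOMER_FIELDS)
    print(f"{len(in_scope)} of {len(AVAILABLE_CUSTOMER_FIELDS)} fields in scope")
    print("missing:", sorted(missing))
```

Running it reports 5 of 246 fields in scope and flags `consent_flag` as a gap: the MDM effort is sized by what the Valuestreams use, and missing fields surface as concrete work items rather than as part of a big-bang normalization.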

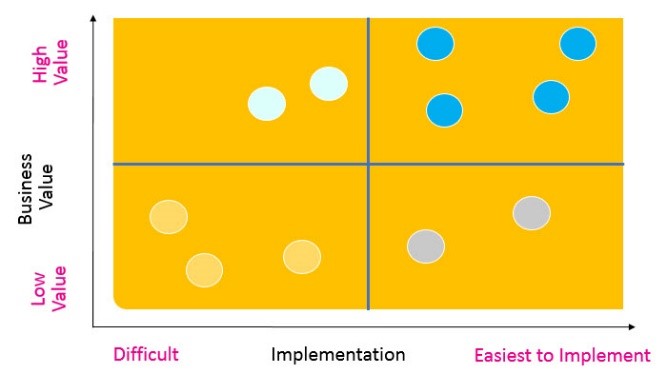

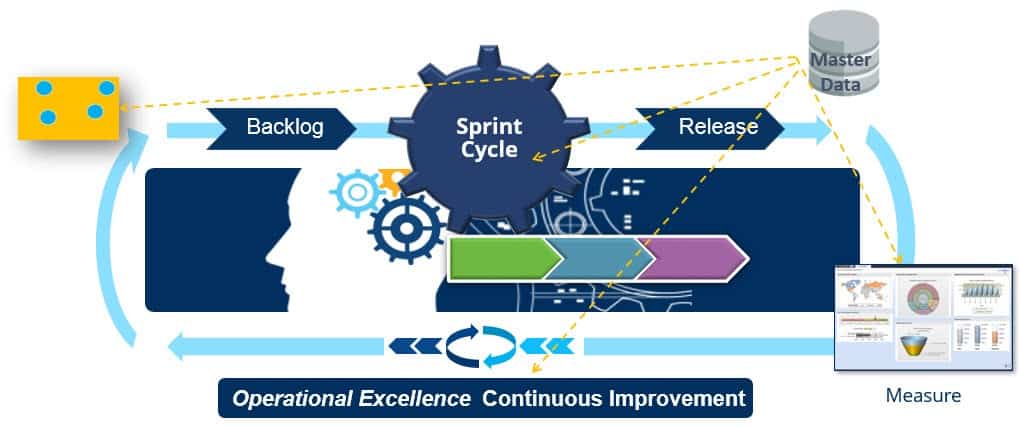

Think Big but Start Small

In the Enterprise-In-Motion, solutions to master data issues are driven by “Think Big…But Start Small” governance. The approach is to realize quick wins, build the needed master data rigor, and then expand with additional transformational solutions that include Master Data. In other words, the iterative approach builds the aggregate Master Data piecemeal, instead of first creating comprehensive Master Data in a Big Bang and only then realizing the digital transformation projects that depend on it. Master Data governance and implementation can be embedded in the DNA of the process automation methodologies, leading to the prioritization of data sources and the optimization needed to manage data within the DPA layer. The objective is to balance the ease of implementing Master Data with the business value of specific prioritized projects.

In the Design Thinking methodology, prioritization is critical for the backlog of Valuestream projects. These prioritizations systematically rank projects, balancing ease of implementation with business value. Here are some potentially measurable dimensions that could impact the prioritization:

- Complexity of consolidating the master data fields for the project

- Impact of poor master data quality on end-to-end cases for the project

- The use of the master data fields in the high-business-impact project

- Availability of APIs to access the master data field values for the project
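One way to turn these dimensions into a ranked backlog is a weighted score, sketched below in Python. The 1 to 5 scales, the grouping into "ease" (consolidation complexity, API availability) and "value" (data-quality impact, business impact), the default 0.6/0.4 weighting, and the example projects are all assumptions for illustration, not a prescribed Design Thinking formula.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A backlog project scored 1 (low) to 5 (high) on each dimension."""
    name: str
    consolidation_complexity: int  # effort to consolidate its master data fields
    data_quality_impact: int       # damage poor master data does to its cases
    business_impact: int           # weight of the fields in high-impact work
    api_availability: int          # how far the field values are exposed via APIs

    def ease(self) -> float:
        # Invert complexity so that a higher number always means "easier".
        return ((6 - self.consolidation_complexity) + self.api_availability) / 2

    def value(self) -> float:
        return (self.data_quality_impact + self.business_impact) / 2


def prioritize(candidates, value_weight=0.6):
    """Rank candidates by a weighted blend of business value and ease."""
    ease_weight = 1 - value_weight
    return sorted(
        candidates,
        key=lambda c: value_weight * c.value() + ease_weight * c.ease(),
        reverse=True,
    )


# Hypothetical backlog; real scores would come from the team's own assessment.
BACKLOG = [
    Candidate("Supplier consolidation", 5, 4, 5, 1),
    Candidate("Customer address change", 2, 5, 4, 4),
    Candidate("Channel pricing", 3, 3, 4, 3),
]

if __name__ == "__main__":
    for c in prioritize(BACKLOG):
        print(c.name)
```

With these numbers, the address-change project ranks first, channel pricing second, and supplier consolidation last: supplier data matters, but hard consolidation and missing APIs pull it down. Adjusting `value_weight` lets a team trade quick wins against strategic value.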

The prioritized slivers reflecting Master Data support are fed into the agile methodology, which should help you continuously monitor and measure the business objectives of each project. The Master Data is optimized iteratively across the slivers. There are three types of iterations in the Top-Down approach:

- Iterations in the backlog of the projects – prioritized by business value as well as Master Data availability dimensions

- Iterations while building the DPA solution while leveraging Master Data

- Post-production iterations that compare the targeted vs. achieved objectives for further improvements

Thus, the Top-Down approach incrementally builds up the Master Data, while continuously delivering high business value projects and improving them.

Digital Transformation: IoT & Blockchain

The Valuestream process-driven top-down approach is an enabler for the value propositions of Digital Transformation technologies. Two of the technologies most critical for the Enterprise-In-Motion are IoT and Blockchain.

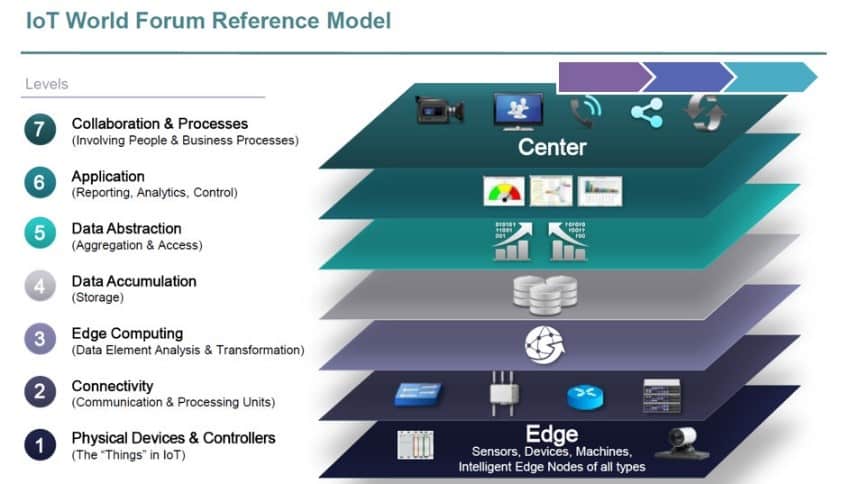

IoT is about the connectivity of increasingly intelligent devices through sensors and actuators. A connectivity foundation that balances range and power-supply considerations is, of course, critical. There are several components in the overall stack and multi-tier architectures of IoT. The lowest levels include physical devices and systems. It is precisely this cyber-physical connectivity that is laying the foundation of the IoT era. Other layers include the data accumulation and analysis layers. IoT-connected devices generate an enormous amount of data: Big Data is becoming Thing Data! Some of this data, often the bulk of it, is processed at the edges.

There are several reference architectures and reference models for IoT. IoT World Forum’s Reference Model puts collaboration and Business Processes at the top of the multi-level architecture for IoT.

This is significant and spot on. Success can be achieved top-down with concrete business objectives from the get-go. Top-down business solutions involve People, Connected Devices (aka IoT), trading Partners, and Enterprise Applications (aka Systems of Record): all collaborating and orchestrating their activities towards concrete and measurable Key Performance Indicators (KPIs). The collaborations are in the context of End-to-End Valuestreams (the operative word being Value), which are modeled, automated, and monitored through DPA for continuous improvement. DPA methodology, competency best practices, and technology are the engine that drives IoT success.

There are many applications of IoT that are driven by DPA Valuestreams. Digital Prescriptive Maintenance is the killer application for IoT. As illustrated here, it involves the orchestration of tasks whose participants include people – for example, Customer Service and Field Service – enterprise applications, AI for triaging the best actions, Warranty chain management, and of course connected devices and IoT. The end-to-end orchestration and automation are achieved through DPA.

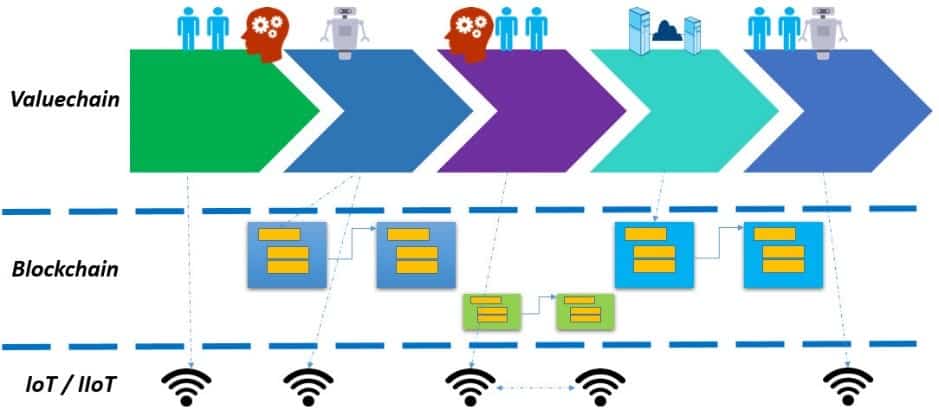

Blockchain is very much a revolution. It is the engine that empowers the emergence of the Internet of Value (IoV). IoV is an important phase in the evolution of the Internet. In the 1990s we started with the Internet of Information: the traditional Internet, the one we use every day to search for information. Next came the Internet of Things, the connected devices that are becoming pervasive in consumer (e.g., Connected Homes), public sector (e.g., Smart Cities), and industrial applications (e.g., Smart Manufacturing). The road to IoT success runs through Digital Process Automation. Blockchain, the underlying technology for cryptocurrencies, is enabling the Internet of Value. The value can be digital currency. More importantly, the “value” can also be data that supports inter- and intra-organizational exchanges in pursuit of business objectives.

Blockchain as a Decentralized & Distributed Database

Blockchain stores the ledger of transactions between various parties in nodes that participate in validating the Blockchain. The ledger is distributed and replicated. Enterprises collaborating in B2B transactions can now share transaction information via Blockchain. One potential application for extended Enterprises-In-Motion (i.e., those involving different trading partners) is to treat the Blockchain as a shared database for their trade transactions and access the data as needed from within their enterprise applications. Thus, instead of each trading partner replicating the data in its internal ERP or database systems, the Blockchain can serve as the Master Data for inter-enterprise transactions! Blockchain technology is still very much in its infancy. We will pass through several “Troughs of Disillusionment” in the hype cycle before robust IoV solutions become pervasive.
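Treating the ledger as shared Master Data rests on an append-only, hash-chained record that every partner can replicate and verify. The Python sketch below shows only that data structure: each block stores the hash of its predecessor, so altering any recorded transaction breaks verification. It deliberately omits consensus, networking, digital signatures, and smart contracts, and the purchase-order fields are hypothetical.

```python
import copy
import hashlib
import json
from dataclasses import dataclass


def block_digest(index, prev_hash, transactions):
    """SHA-256 over a canonical JSON encoding of the block's contents."""
    payload = json.dumps(
        {"index": index, "prev": prev_hash, "tx": transactions}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class Block:
    index: int
    prev_hash: str
    transactions: list
    block_hash: str = ""


class Ledger:
    """Append-only, hash-chained list of blocks (no consensus or networking)."""

    GENESIS_PREV = "0" * 64

    def __init__(self):
        genesis = Block(0, self.GENESIS_PREV, [])
        genesis.block_hash = block_digest(0, genesis.prev_hash, [])
        self.blocks = [genesis]

    def append(self, transactions):
        prev = self.blocks[-1]
        block = Block(prev.index + 1, prev.block_hash, list(transactions))
        block.block_hash = block_digest(block.index, block.prev_hash, block.transactions)
        self.blocks.append(block)
        return block

    def is_valid(self):
        """Recompute every block's hash and check each link to its predecessor."""
        for i, cur in enumerate(self.blocks):
            expected_prev = self.GENESIS_PREV if i == 0 else self.blocks[i - 1].block_hash
            if cur.prev_hash != expected_prev:
                return False
            if cur.block_hash != block_digest(cur.index, cur.prev_hash, cur.transactions):
                return False
        return True


if __name__ == "__main__":
    ledger = Ledger()
    ledger.append([{"po": "PO-1001", "buyer": "AcmeCo", "seller": "PartsInc", "qty": 50}])
    ledger.append([{"po": "PO-1001", "event": "shipped", "qty": 50}])

    # Each trading partner holds a replica; matching tip hashes mean identical history.
    replica = copy.deepcopy(ledger)
    print(replica.blocks[-1].block_hash == ledger.blocks[-1].block_hash)  # True

    # Tampering with a recorded transaction in one replica is detectable.
    replica.blocks[1].transactions[0]["qty"] = 999
    print(replica.is_valid(), ledger.is_valid())  # False True
```

Comparing only the latest block hash is enough to confirm two replicas share the same history, which is what lets partners query the ledger instead of copying trade data into their own ERP systems.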

Blockchain technology discussions also tend to be very much “bottom-up” – an interesting innovation looking for problems to solve. Like the success of IoT running through DPA, Blockchain needs to evolve to a Valuechain (aka Valuestream) approach – powered through DPA!

- Valuechain Orchestration Layer: At the top, you have the end-to-end Valuechain that orchestrates and sequences tasks involving people, automated devices, enterprise applications (aka systems of record), and trading partners. This layer is digitized and automated through DPA.

- Blockchain Layer: The decentralized distributed ledgers are managed at the middle Blockchain layer. The Blockchains will execute smart contract rules, exchange cryptocurrencies, or both. The tasks or milestones of the end-to-end Valuechain will leverage Blockchain in specific steps.

- IoT/IIoT Connected Device Layer: The lowest layer will be the IoT/IIoT connectivity layer. An added advantage of combining Blockchain with IoT/IIoT edge computing is that execution and transactions can be pushed to the edges. The overall Valuechain sits at the top, with IoT/IIoT edge computing leveraging Blockchain as needed.

Process + Data Conclusions

Process and Data are both critical for the Enterprise-In-Motion. However, the Process layer for automated Valuestreams, with robust DPA (the current incarnation in the evolution of BPM), is often missing from IT infrastructures and enterprise architectures. Part II of Process + Data covered three compelling use cases that clearly illustrate the power of a top-down, business-driven Process approach. Even Master Data, which is at its core a database challenge, can be transformed and optimized by prioritizing the Valuestreams and building up the Master Data in the context of DPA iterations. The two other use cases pertain to the most compelling Digital Transformation technologies: IoT and Blockchain. For both, the road to success runs through DPA!

Process + Data are both critical for innovation and transformation. The potential and the power of Data insights are realized in the digitized and automated processes.

The Enterprise-In-Motion IT infrastructures and enterprise architectures, as well as the accompanying business value-focused methodologies, need DPA.