
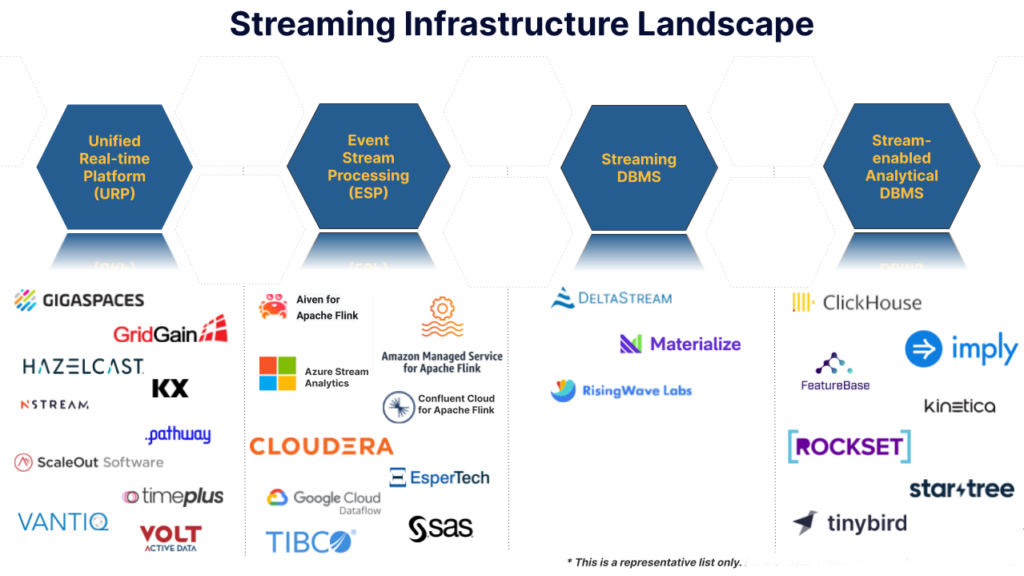

In our previous article, Unified Real-time Platforms, we drew attention to an important, expanding category of software for event-based, real-time systems. In this research, we go deeper into the essential capabilities of a URP and how it differs from event stream processing (ESP) platforms, streaming DBMSs, and stream-enabled analytical DBMSs. The figure below shows a representative list of products that span these categories.

Figure 1: Streaming landscape products

This research document is part 1 of a two-part collection on our views regarding URPs. The second document, How to Select a Unified Real-time Platform, lays out evaluation criteria to help organizations select the URP that is best suited to their circumstances. Through our research, we hope to raise awareness of systems that make better real-time decision-making possible.

Where URPs are Relevant

URPs are motivated by escalating business demands for smarter operational applications that can leverage up-to-the-second data to make faster and better decisions. Streaming data from sensors, vehicles and other machines, cameras, mobile devices, news feeds, other external web sources, and transaction processing applications is proliferating. Real-time use cases create new revenue opportunities, help counter threats, and mitigate risks. For more discussion of where to use URPs and some examples of URP applications, see Four Kinds of Software to Process Streaming Data in Real Time.

In the past, organizations often had to settle for non-real-time batch or micro-batch approaches because achieving real-time latency was cost-prohibitive. The decreasing costs of processors and networks; the widespread adoption of streaming messaging middleware such as Kafka; and improvements in software, such as URPs, have combined to make real-time latency practical for a growing set of applications.

URP applications perform complex transformations and analytics on real-time streaming data and use the results in conjunction with older data of record and reference data, which serve as contextual information (including real-time features). They provide decision support to augment human intelligence or implement full decision automation with no humans in the decision process.

Generative AI has expanded the kinds of decision intelligence that can be applied. Gen AI can be used along with traditional predictive machine learning (ML) and symbolic AI techniques such as rule processing. The combination of decision techniques is “hybrid AI” (sometimes called composite AI). With URPs, we take it a step further into “real-time hybrid AI” because at least some of the data is current (real-time).

URPs address use cases that require either or both of the following:

- Low latency: the system must respond in milliseconds or a few seconds because the business value of the action decreases with delays.

- High volume: streams with many events per second and/or systems that continuously track many entities. The volume of streaming data is typically much higher than that in traditional systems.

URPs are not relevant for offline data science or business intelligence applications that solely target non-real-time operational decisions.

URP Characteristics

By definition, all URPs provide three kinds of capabilities:

● Application enablement

URPs incorporate application platforms that let developers build and execute real-time, analytically enhanced, operational business applications. These may (1) execute request-driven business transactions (such as payments, orders, or customer inquiries) or (2) monitor and manage large networks of devices (such as trucking fleets, cell phone networks, manufacturing lines, or supply chains).

URPs include build-time development tools and run-time infrastructure for backend (data-facing) business logic with real-time analytics, which may include rule processing and predictive and generative ML/AI, including orchestration that supports retrieval-augmented generation (RAG).

Application enablement critical capabilities include:

- Pull-based: Synchronous, request-driven operations exposed as user-defined APIs through interfaces such as RESTful APIs. These include queries, updates, and other business logic (i.e., they are not limited to DBMS DML commands). For example, an application might look up a customer's profile when an IVR system receives a call.

- Push-based: Asynchronous, user-defined, event-driven operations on data received through adapters to Kafka, Kafka-like, or other messaging systems, or through webhooks, WebSockets, Server-Sent Events (SSE), or other asynchronous communication mechanisms. For example, an application might scan each new log file added to an Amazon S3 bucket for personally identifiable information (PII) to meet regulatory compliance requirements.

- Asynchronous batch operations (optional): Examples include batch payments or processing order shipments from a warehouse.

- Data integrity mechanisms (optional): These include support for atomic inserts, updates, and deletes of data of record with strong or eventual consistency, isolation, durability, and other transaction semantics. These tasks may be performed by a combination of the messaging system and the URP’s application and data management capabilities.

- Process orchestration (optional): Engines that manage multistep processes triggered by input requests or by detecting threats or opportunities in input event streams. For example, an engine might identify anomalies in an input event stream that indicate a failing mining operation and then schedule the required repairs.

- End-user interface tools (optional): For the front-end of business applications, including reports, dashboards, or alerts on browsers or mobile or other devices.
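The sketch below contrasts the pull-based and push-based paths described above. It is a minimal illustration under stated assumptions, not how any particular URP works: the `CustomerApp` class, its methods, the event fields, and the three-call threshold are all hypothetical. A real platform would expose `get_profile` through something like a REST endpoint and feed `on_event` from a Kafka consumer or webhook. The point is that both paths share the same application state.

```python
from dataclasses import dataclass, field


@dataclass
class CustomerApp:
    """Hypothetical backend logic: one state store shared by a pull
    (request/reply) path and a push (event-driven) path."""
    profiles: dict = field(default_factory=dict)
    alerts: list = field(default_factory=list)

    # Pull-based: a caller (e.g., an IVR system) asks and waits for the answer.
    def get_profile(self, customer_id: str) -> dict:
        return self.profiles.get(customer_id, {"customer_id": customer_id, "calls": 0})

    # Push-based: the platform invokes this handler for each incoming event.
    def on_event(self, event: dict) -> None:
        cid = event["customer_id"]
        profile = self.profiles.setdefault(cid, {"customer_id": cid, "calls": 0})
        if event["type"] == "call":
            profile["calls"] += 1
            # React to the event without anyone having asked (fires once, on the 3rd call).
            if profile["calls"] == 3:
                self.alerts.append(f"{cid}: frequent caller, route to retention team")


app = CustomerApp()
for _ in range(3):
    app.on_event({"type": "call", "customer_id": "c42"})
print(app.get_profile("c42"))  # {'customer_id': 'c42', 'calls': 3}
print(app.alerts)              # ['c42: frequent caller, route to retention team']
```

Because the push path updates state as events arrive, the pull path can answer from current data without recomputing anything at request time.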

● Stream processing

Applications that are built on URPs are situationally aware because they ingest one or more kinds of continuous (unbounded) streaming data and perform real-time analytics on those streams. Depending on the product, a URP supports many, and in some cases all, of the streaming capabilities of an ESP platform, such as window-based functions and handling of out-of-order and late-arriving messages.
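Window-based functions and out-of-order handling can be illustrated with a minimal tumbling-window count in plain Python. This sketch assumes a simple watermark (the maximum event time seen so far) and a fixed allowed-lateness bound; `WINDOW`, `ALLOWED_LATENESS`, and `tumbling_counts` are hypothetical names, and production engines offer more sophisticated watermark strategies.

```python
from collections import defaultdict

# Hypothetical parameters, in seconds of event time.
WINDOW = 10            # tumbling window length
ALLOWED_LATENESS = 5   # how long a window stays open after its end


def tumbling_counts(events):
    """Count keys per tumbling window of event time.

    `events` is an iterable of (event_time, key) pairs in arrival order,
    which may differ from event-time order. Returns (closed, dropped):
    closed windows as (window_start, counts), and events that arrived
    after their window had already been emitted."""
    open_windows = defaultdict(lambda: defaultdict(int))
    closed, dropped = [], []
    watermark = float("-inf")  # simple watermark: max event time seen so far
    for ts, key in events:
        start = ts - ts % WINDOW
        if start + WINDOW + ALLOWED_LATENESS <= watermark:
            dropped.append((ts, key))  # too late: window already emitted
            continue
        open_windows[start][key] += 1
        watermark = max(watermark, ts)
        # Emit every window the watermark has moved past (plus lateness).
        for s in sorted(open_windows):
            if s + WINDOW + ALLOWED_LATENESS <= watermark:
                closed.append((s, dict(open_windows.pop(s))))
    for s in sorted(open_windows):  # end of input: flush what is left
        closed.append((s, dict(open_windows[s])))
    return closed, dropped


arrivals = [(1, "a"), (4, "b"), (12, "a"), (8, "a"), (21, "b"), (3, "a"), (30, "a")]
closed, dropped = tumbling_counts(arrivals)
print(closed)   # [(0, {'a': 2, 'b': 1}), (10, {'a': 1}), (20, {'b': 1}), (30, {'a': 1})]
print(dropped)  # [(3, 'a')]
```

The event at time 8 arrives after the event at time 12 but is still counted because its window is within the allowed lateness, while the event at time 3 arrives after its window was emitted and is dropped. Engines such as Flink also let developers route late data to a side output instead of discarding it.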

Data comes to a predefined query (typically a directed acyclic graph, or DAG, data flow), in contrast to the traditional data system approach, where a query comes to data after it has landed. The URP can implement this stream processing in an engine that is separate from the application enablement platform, but this is not required as long as the following stream processing critical capabilities are supported:

- Ingest high-volume streams from Kafka, Kafka-like, or other messaging systems, or other event-capable interface mechanisms.

- Process streaming data immediately after it arrives through user-defined operations that may include computing aggregates, joins, pattern detection, and general business logic.

- Sense conditions and trigger responses, using rules and other analytics on incoming streams to detect threats and opportunities and then triggering responses to be executed within the URP or by an external actor.
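To illustrate how data comes to a predefined query, the sketch below wires three operators into a linear chain (the simplest DAG) using Python generators: parse raw readings, maintain a per-device rolling average (a stateful operation), and sense a condition that triggers a response. The record format, operator names, three-reading window, and limit of 80 are hypothetical; real engines distribute such graphs across machines and manage operator state for you.

```python
from collections import defaultdict, deque
from typing import Callable, Iterable, Iterator

Event = dict


def parse(lines: Iterable[str]) -> Iterator[Event]:
    """Operator 1: turn raw 'device,temp' strings into records, skipping malformed input."""
    for line in lines:
        device, _, temp = line.partition(",")
        try:
            yield {"device": device, "temp": float(temp)}
        except ValueError:
            continue


def rolling_avg(records: Iterable[Event], n: int = 3) -> Iterator[Event]:
    """Operator 2: stateful per-device average over the last n readings."""
    state = defaultdict(lambda: deque(maxlen=n))
    for r in records:
        window = state[r["device"]]
        window.append(r["temp"])
        yield {**r, "avg": sum(window) / len(window), "full": len(window) == n}


def detect(records: Iterable[Event], limit: float, act: Callable[[Event], None]) -> None:
    """Operator 3 (sink): sense a condition and trigger a response."""
    for r in records:
        if r["full"] and r["avg"] > limit:
            act(r)


# The query (pipeline) is defined once; data then flows through it.
raw = ["pump1,70", "pump1,82", "pump2,60", "pump1,95", "bad-line", "pump1,99"]
alerts = []
detect(rolling_avg(parse(raw)), limit=80.0, act=alerts.append)
print([(a["device"], round(a["avg"], 1)) for a in alerts])  # [('pump1', 82.3), ('pump1', 92.0)]
```

In a URP, the `act` callback could invoke business logic inside the platform or notify an external actor.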

● Data management

URPs store and provide access to real-time streaming data and other kinds of data using in-memory stores, persisted stores, or both. URP data stores can range from object stores and data grids to key-value stores, document stores, graph DBMSs, row-oriented transactional relational DBMSs, and column-optimized OLAP stores. URPs may support a variety of indexing strategies for high performance.

Data management critical capabilities include:

- Manage new streaming data in memory to support stateful operations. URPs usually also offer durability by persisting data on a nonvolatile medium. Some RDBMS-based products combine row and column stores to balance performance and durability across in-memory and on-disk storage.

- Store and provide access to historical reference data, state data, and old, previously streamed data (i.e., data from previous hours, days, months, or years).

- Integrate closely with the application enablement and stream processing components (this is essential to the end-to-end high scalability and low latency of URP applications).

- Support hybrid storage or data tiering (optional) for better price-performance.
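The first capability in this list, keeping hot state in memory while persisting it for durability, can be sketched as an in-memory map backed by an append-only log that is replayed on restart. The class name, JSON-lines log format, and per-write `os.fsync` are assumptions for illustration; real URPs use far more efficient storage engines, snapshots, and replication.

```python
import json
import os
import tempfile


class DurableStateStore:
    """Hypothetical in-memory key-value state backed by an append-only JSON-lines log."""

    def __init__(self, log_path: str):
        self.log_path = log_path
        self.state: dict[str, float] = {}
        self._recover()
        self._log = open(log_path, "a", encoding="utf-8")

    def _recover(self) -> None:
        # Rebuild in-memory state by replaying the log, if one exists.
        if not os.path.exists(self.log_path):
            return
        with open(self.log_path, encoding="utf-8") as f:
            for line in f:
                rec = json.loads(line)
                self.state[rec["key"]] = rec["value"]

    def put(self, key: str, value: float) -> None:
        # Persist to the log first (durability), then update memory (fast reads).
        self._log.write(json.dumps({"key": key, "value": value}) + "\n")
        self._log.flush()
        os.fsync(self._log.fileno())
        self.state[key] = value

    def get(self, key: str, default=None):
        return self.state.get(key, default)

    def close(self) -> None:
        self._log.close()


path = os.path.join(tempfile.mkdtemp(), "state.log")
store = DurableStateStore(path)
store.put("truck-7:speed", 61.5)
store.put("truck-7:speed", 64.0)
store.close()

restarted = DurableStateStore(path)    # simulates a process restart
print(restarted.get("truck-7:speed"))  # 64.0
restarted.close()
```

Reads never touch the disk, which is what keeps stateful stream operations fast; the log only matters after a failure.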

Positioning Relative to Other Streaming Infrastructure Products

There is considerable confusion in the market regarding the different kinds of products that support real-time analytics on streaming data, including (1) URPs, (2) event stream processing (ESP) platforms, (3) streaming DBMSs, and (4) stream-enabled analytical DBMSs. These products have overlapping capabilities and can be substituted for one another for some applications. All of these products are becoming more widely used because of the proliferation of streaming data and the increasing business requirements for smarter real-time applications. Next, we will drill down into the four categories to compare and contrast their characteristics.

URPs

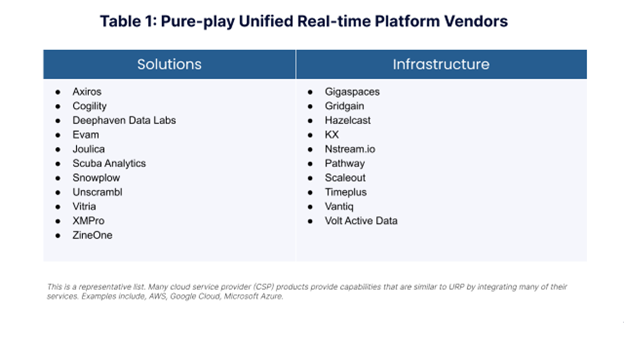

As described above, a URP is an end-to-end platform that combines an application engine and data management capabilities with provisions for handling real-time streams. Commercial URP products can be categorized as either general-purpose infrastructure software or domain-specific software solutions (which are built on embedded general-purpose, domain-independent URP infrastructure).

A solution is a set of features and functions, an application template, or a full (tailorable) commercial off-the-shelf (COTS) application or SaaS offering that is focused on a particular vertical or horizontal domain. URP solutions are available for various aspects of customer relationship management (CRM); supply chain management; IoT asset management; transportation operations (trucks, planes, airlines, maritime shipping); capital markets trading; AIOps; and other application areas.

Infrastructure products offer general-purpose URP capabilities that are suitable for use in many industries and applications. Technically, their capabilities are a subset of those of solutions, so the user company or a third-party partner must build the application from scratch, whereas solutions should need less customization. This article is focused primarily on URP infrastructure. For more discussion of URP-based solutions, see How to Select a Unified Real-time Platform.

Table 1 is a representative sampling, not a comprehensive list of solution and infrastructure URPs.

ESP Platforms

The purpose of an ESP platform is specifically to process streaming data as it arrives, so it does not support interactive, request/reply application logic as an application platform would, nor general-purpose long-term storage of streaming data, reference data, or business data of record. Nevertheless, it supports almost any kind of logic that a developer might want to apply to streaming data.

ESP platforms perform incremental computation on streams while the data is in motion and before it is stored in a separate database or file. ESP platforms keep some recent data in internal buffers (state stores) temporarily to support multistage real-time data flow pipelines (sometimes called jobs or topologies). They apply calculations on moving time windows of records (typically minutes or hours in duration), and may take checkpoints to enable faster restarts.
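The sketch below shows incremental computation over a local state store, plus a checkpoint that captures state and input position together so a restarted operator can resume from the checkpoint instead of reprocessing the whole stream. It assumes a replayable source that can be re-read from an offset (as with Kafka topics); `CountOperator` and its methods are hypothetical names, and real ESP platforms coordinate checkpoints across many parallel operators.

```python
import copy
from collections import Counter


class CountOperator:
    """Hypothetical incremental per-key count with checkpoint/restore."""

    def __init__(self):
        self.state = Counter()  # local state store
        self.offset = 0         # position of the next input record to process

    def process(self, stream: list[str], upto: int) -> None:
        # Update state one record at a time; earlier records are never recomputed.
        while self.offset < upto:
            self.state[stream[self.offset]] += 1
            self.offset += 1

    def checkpoint(self) -> dict:
        # Snapshot state and input position together so they stay consistent.
        return {"state": copy.deepcopy(self.state), "offset": self.offset}

    @classmethod
    def restore(cls, snap: dict) -> "CountOperator":
        op = cls()
        op.state = copy.deepcopy(snap["state"])
        op.offset = snap["offset"]
        return op


stream = ["click", "view", "click", "buy", "view", "click"]
op = CountOperator()
op.process(stream, 4)
snap = op.checkpoint()
op.process(stream, 5)  # progress after the checkpoint is lost in a simulated crash

recovered = CountOperator.restore(snap)
recovered.process(stream, len(stream))  # replay only from the checkpointed offset
print(dict(recovered.state))  # {'click': 3, 'view': 2, 'buy': 1}
```

The recovered counts match what an uninterrupted run would produce, because the snapshot never separates the state from the offset it reflects.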

ESP platforms include Flink (from Aiven, Amazon, Apache, Confluent, Cloudera, and many other vendors), Arroyo (from Apache and Arroyo Systems), Axual KSML, Espertech Esper, Google Cloud Dataflow, Kafka Streams (from Apache and Confluent), Microsoft Azure Stream Analytics, SAS Event Stream Processing, Spark Streaming (from Apache, Databricks, and many others), TIBCO Streaming, and similar products.

Streaming DBMSs

Streaming DBMSs, such as DeltaStream, Materialize, and RisingWave, focus on the data management (storage and retrieval) aspect of applications. However, to accomplish this with streaming data, they also need to implement multistep internal pipelines to transform incoming streams in a manner somewhat similar to ESP platforms.

Some products are based on Differential Dataflow concepts, incrementally maintaining materialized table views that are always current. As with ESP platforms, streaming DBMSs aren’t designed to support general-purpose interactive, request/reply application logic or transaction processing applications as an application platform would.
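Differential Dataflow tracks versioned collections of (record, time, diff) updates. The sketch below keeps only the core idea: a materialized view is updated from signed diffs rather than recomputed from scratch. The `orders` table, the `apply_changes` helper, and the data are hypothetical, and this is a simplified illustration, not how DeltaStream, Materialize, or RisingWave implement view maintenance.

```python
from collections import Counter


def apply_changes(view: Counter, changes) -> list:
    """Incrementally maintain SELECT region, COUNT(*) FROM orders GROUP BY region.

    Each change is ((order_id, region), diff), where diff is +1 for an insert
    and -1 for a delete; an update is a delete plus an insert. Work is
    proportional to the number of changes, not the size of the table.
    Returns the view's own changes as (region, old_count, new_count)."""
    emitted = []
    for (_order_id, region), diff in changes:
        old = view[region]          # Counter returns 0 for missing keys
        new = old + diff
        if new == 0:
            view.pop(region, None)  # drop empty groups from the view
        else:
            view[region] = new
        emitted.append((region, old, new))
    return emitted


view = Counter()
apply_changes(view, [(("o1", "EU"), +1), (("o2", "US"), +1), (("o3", "EU"), +1)])
# Order o2 moves from US to EU: retract the old row, then insert the new one.
delta = apply_changes(view, [(("o2", "US"), -1), (("o2", "EU"), +1)])
print(delta)       # [('US', 1, 0), ('EU', 2, 3)]
print(dict(view))  # {'EU': 3}
```

Because the view emits its own changes as diffs, downstream views can be maintained the same way, which is how multistep pipelines stay current.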

Stream-enabled analytics DBMSs

Stream-enabled analytics DBMSs, such as ClickHouse, Druid, Imply, FeatureBase, Kinetica, Rockset, StarTree (Apache Pinot), and Tinybird, support OLAP-type analytics on streaming data immediately after it has been stored. They also often manage large sets of historical data. They support canned or ad hoc interactive queries with very low latency by leveraging a variety of data models and indexing techniques.

Stream-enabled analytics DBMSs are used for real-time and near-real-time operational decisions, often using clickstreams, IoT sensor data, or other common high-volume streams. As with streaming DBMSs and ESP platforms, they are not complete platforms for interactive, transaction processing applications.
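For contrast with the "data comes to a predefined query" model of stream processing described earlier, the following shows the pattern these products follow: data lands first, and queries come to it afterward. It uses Python's built-in `sqlite3` module purely as a stand-in; SQLite is not a stream-enabled analytics DBMS, and the table, index, and 60-second lookback are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE clicks (ts INTEGER, page TEXT)")
conn.execute("CREATE INDEX idx_clicks_ts ON clicks (ts)")  # index supports time-range queries

# Ingest: each event is stored as soon as it arrives.
for event in [(100, "/home"), (101, "/cart"), (105, "/home"), (160, "/home")]:
    conn.execute("INSERT INTO clicks (ts, page) VALUES (?, ?)", event)
conn.commit()

# Query: an ad hoc question asked later, over whatever data has landed.
now = 160
rows = conn.execute(
    "SELECT page, COUNT(*) FROM clicks WHERE ts > ? GROUP BY page ORDER BY page",
    (now - 60,),
).fetchall()
print(rows)  # [('/cart', 1), ('/home', 2)]
conn.close()
```

Here the query can change from one request to the next, but it only sees data after it has been stored; the products in this category optimize that store-then-query path for very low latency.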

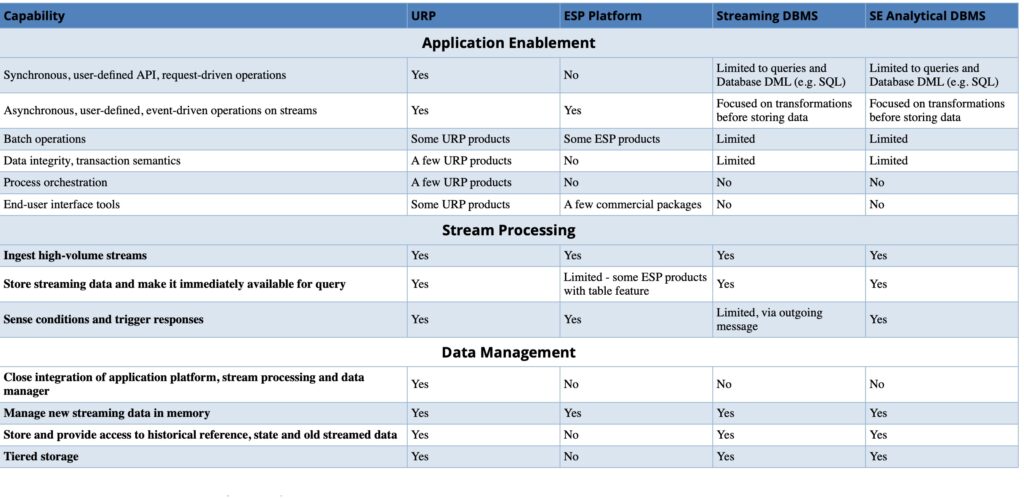

In Table 2, we summarize the key differences between these four product categories. The rows map to the URP capabilities described in the section “URP Characteristics” above. Note that we are generalizing here; some products in each category may have or lack capabilities in ways not reflected in our table. If your application needs a specific capability, validate it for the products you are considering rather than relying on the table.

Table 2: Key differences between popular types of software used for processing streaming data in real time.

Other Related Product Categories

This article deals with four particular product categories because they are relatively new and are focused directly on streaming analytics. However, we acknowledge that several other more established data management products and some new product types are also relevant for real-time analytics on streaming data. Many of these products are adding features and becoming more competitive for some of the applications that are addressed by the four product types described above. However, time and space pressures made it impractical for us to include them in this document. Such products include:

- In-memory data stores like Aerospike, memcached, and Redis.

- Time series DBMSs, such as Amazon Timestream, InfluxDB, Microsoft ADX, and TimescaleDB.

- Cloud data warehouses and lakehouses like Google BigQuery, Snowflake, and Databricks.

- Search-based tools, such as ChaosSearch, Elastic, and OpenSearch.

- Multimodel DBMSs, including Cassandra, MongoDB, Scylla, and SingleStore.

- New streaming graph data managers, such as the thatDot platform, which is built on Quine.

- New vector databases, such as Pinecone, which can convert Kafka topics into vector embeddings using an LLM in real time. Many other vector databases, such as Qdrant, Milvus, and Weaviate, also support streaming ingestion.