Confluent Platform 2.0, based on Kafka 0.9 Core, offers improved enterprise security and quality-of-service features.

Data management company Confluent has announced an update to its Apache Kafka-based platform. In a Dec. 8 release, the company said Confluent Platform 2.0 offers improved enterprise security and quality-of-service capabilities, as well as new features designed to speed up and simplify development.

“Kafka is becoming much more popular, as evidenced by the growing number of tools that are out there, and it anchors a stream processing ecosystem that is changing how businesses process data,” said Doug Henschen, vice president and principal analyst at Constellation Research.

Confluent, which was founded by the creators of Kafka, said Platform 2.0 includes a range of developer-friendly features, including a new Java consumer that aims to simplify the development, deployment, scaling, and maintenance of consumer applications that use Kafka. There’s also a supported C client for implementing producers and consumers.

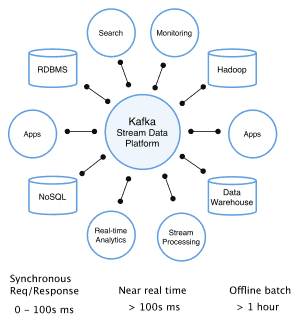

According to Confluent, businesses often use Kafka to build centralized data pipelines for microservices or enterprise data integration, such as high-capacity ingestion routes into Apache Hadoop or traditional data warehouses. Kafka is also used as a foundation for advanced stream processing with Apache Spark, Storm, or Samza.

For enterprises, new features in Confluent 2.0 include:

- Kafka Connect: A new connector-driven data integration feature that eases large-scale, real-time data import and export for Kafka. It lets developers integrate various data sources with Kafka without writing code, and it is built for 24/7 production use, with automatic fault tolerance, transparent high-capacity scale-out, and centralized management.

- Data encryption: Encrypts data over the wire using SSL.

- Authentication and authorization: Allows access control with permissions that can be set on a per-user or per-application basis.
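
To make the encryption item above more concrete, here is a minimal sketch of the client-side settings a Java application might assemble to talk to an SSL-enabled broker. The configuration keys are standard Kafka client settings; the class name, broker address, group name, truststore path, and password are all hypothetical placeholders, and the article does not describe Confluent's own recommended setup.

```java
import java.util.Properties;
import java.util.TreeSet;

// Hypothetical helper class: only the configuration keys are real Kafka
// client settings. Every value passed in main() is a placeholder.
public class SslClientConfigSketch {

    // Builds consumer settings that request SSL encryption on the wire.
    static Properties build(String bootstrapServers, String groupId,
                            String truststorePath, String truststorePassword) {
        Properties props = new Properties();
        // Where to find the cluster and which consumer group to join.
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("group.id", groupId);
        // Treat message keys and values as plain strings.
        props.setProperty("key.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        props.setProperty("value.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        // Ask the client to use SSL and point it at a truststore that
        // holds the certificate used to verify the brokers.
        props.setProperty("security.protocol", "SSL");
        props.setProperty("ssl.truststore.location", truststorePath);
        props.setProperty("ssl.truststore.password", truststorePassword);
        return props;
    }

    public static void main(String[] args) {
        Properties props = build("broker1.example.com:9093", "demo-group",
                "/path/to/client.truststore.jks", "changeit");
        // Print only the keys, in sorted order, so the password is not echoed.
        for (String key : new TreeSet<>(props.stringPropertyNames())) {
            System.out.println(key);
        }
    }
}
```

With the Kafka client library on the classpath, a `Properties` object like this could be passed to the `KafkaConsumer` constructor. Authorization is a separate, broker-side concern and is not covered by this sketch.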

According to Confluent, Kafka powers every part of LinkedIn’s business, where it has scaled to more than 1.1 trillion messages per day. It also drives Microsoft’s Bing, Ads, and Office operations at more than 1 trillion messages per day.
